AI Virtual Assistants for Business: Enterprise-Ready, Microsoft-Powered

Introduction: Why AI Assistants Belong in Every Enterprise

In an era where knowledge workers are overloaded and digital tools are underutilized, AI virtual assistants offer something uniquely valuable: enterprise-grade leverage.

AI assistants aren’t just novelties or internal toys—they’re fast becoming strategic assets in modern organizations. By combining prompt engineering, natural language interaction, and integration with your existing Microsoft stack, AI assistants can streamline decisions, automate tasks, and surface institutional knowledge with the precision of a top-performing employee—on demand, 24/7.

This webpage is part of a broader framework we call AI Core Applications: modular, foundational uses of artificial intelligence that offer immediate value with low risk. Of those, AI assistants stand out as one of the most broadly applicable. Why? Because nearly every knowledge worker has repeatable tasks, complex decisions, or documented processes that can be supported—if not enhanced—by a well-structured assistant.

Unlike chatbots—which are usually narrow, rule-based, and rely on predefined dialog flows—AI assistants operate by prompting large language models (LLMs) with user input, dynamic context, and domain-specific knowledge. This enables them to answer open-ended questions, synthesize reports, generate content, and act as intelligent copilots for business operations.

Here’s what makes AI assistants especially relevant for mid-sized and large organizations that rely on Microsoft technologies:

- 🧠 Cognitive depth: They pull context from calendars, emails, files, databases, and external APIs to produce high-value answers—not just static responses.

- ⚙️ Modular design: They’re built using Microsoft’s enterprise-friendly tools like Semantic Kernel, Azure OpenAI, and Copilot SDKs.

- 🚀 Low-friction adoption: They run inside familiar environments—like Teams, Outlook, or custom .NET apps—without needing a cultural overhaul.

- 💼 Business alignment: They solve real problems: “Find our top 10 customers by margin this year,” “Summarize last week’s project status updates,” “Draft an onboarding email for new hires using our tone and policies.”

This guide will walk you through everything you need to know to turn AI assistants from a buzzword into a real working system inside your business. Whether you’re part of an innovation team, leading a digital transformation initiative, or just trying to reduce redundant tasks across departments, AI assistants are one of the fastest ways to deliver visible wins.

Let’s explore how to build, deploy, and scale them—using the Microsoft stack you already trust.

What Are AI Virtual Assistants?

AI virtual assistants are prompt-driven, context-aware tools that interface with large language models (LLMs) to perform tasks, answer questions, and support human decision-making inside your business. Unlike chatbots—which follow pre-programmed dialog flows—AI assistants interpret user intent, apply business context, and return dynamically generated responses based on natural language processing.

Think of them as on-demand copilots for employees. The user selects a task, enters relevant input variables (such as customer name, report type, or time range), and the assistant interacts with an LLM—often augmented with internal documents or APIs—to return a tailored output.

🔄 The Assistant Interaction Flow

1. Select task/prompt →

2. Enter input variables →

3. Context added (user role, docs, data) →

4. LLM generates output →

5. Optional plugin action (e.g., send email, update system)
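The flow above can be sketched in plain C# to show how the pieces hand off to one another. This is a minimal illustration under stated assumptions, not Semantic Kernel's API: `callLlm` is a hypothetical stand-in for a real model call (for example, an Azure OpenAI deployment), and the task catalog, context string, and `sendEmail` action are placeholders.

```csharp
using System;
using System.Collections.Generic;

// Step 1: a catalog of predefined tasks (prompt templates). Hypothetical examples.
var tasks = new Dictionary<string, string>
{
    ["summarize-revenue"] = "Summarize {period} revenue performance by product line.",
    ["onboarding-email"] = "Draft a welcome message and task list for a new {role} hire starting {startDate}."
};

// Hypothetical stand-in for an LLM call; a real assistant would call Azure OpenAI here.
Func<string, string> callLlm = prompt => $"[draft generated from a {prompt.Length}-character prompt]";

// Hypothetical action plugin for step 5; here it only records what would be sent.
var sentEmails = new List<string>();
Action<string> sendEmail = body => sentEmails.Add(body);

string RunAssistant(string taskId, Dictionary<string, string> inputs, string userContext, bool emailResult)
{
    // Step 2: substitute the user's input variables into the selected template.
    string prompt = tasks[taskId];
    foreach (var (name, value) in inputs)
        prompt = prompt.Replace("{" + name + "}", value);

    // Step 3: add context such as user role, retrieved documents, or data.
    string fullPrompt = $"Context: {userContext}\n\nTask: {prompt}";

    // Step 4: the model generates the output.
    string output = callLlm(fullPrompt);

    // Step 5 (optional): trigger a follow-up action with the result.
    if (emailResult)
        sendEmail(output);
    return output;
}

string result = RunAssistant(
    "onboarding-email",
    new Dictionary<string, string> { ["role"] = "QA engineer", ["startDate"] = "next Monday" },
    userContext: "Requested by an HR coordinator in the Engineering division",
    emailResult: true);

Console.WriteLine(result);
```

Keeping each step as a separate, replaceable piece is what lets the same skeleton serve the financial, HR, and sales examples below with different templates and actions.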

💡 Real-World Examples

- Financial Analyst Copilot:

  Prompt: “Summarize this quarter’s revenue performance by product line”

  Input: Excel file or Power BI dataset

  Output: Bullet-point summary, executive brief, or Outlook email draft

- HR Onboarding Assistant:

  Prompt: “Draft a welcome message and task list for a new engineering hire”

  Input: Role, team, start date

  Output: Email with links to internal systems, policies, and team introductions

- Sales Report Generator:

  Prompt: “Top 10 deals lost last month with reasons”

  Input: CRM data connector

  Output: Summary of trends, customer objections, and internal action items

🧠 What Makes AI Assistants Different from Chatbots?

| Feature | AI Assistant | Chatbot |
|---|---|---|
| Purpose | Perform complex tasks, answer open-ended queries | Automate structured dialog flows |
| Engine | LLM (e.g., GPT via Azure OpenAI) | Rules, decision trees, FAQ lists |
| Input Style | Prompt + variables | Button/menu or typed inputs |
| Context | Pulls from documents, APIs, user role | Limited or no context |
| Output | Dynamic, personalized response | Predefined, static response |
| Use Case | Enterprise decision support, workflow augmentation | Simple customer service or scripted help |

AI assistants are dynamic, intelligent, and reusable modules for employee empowerment—not just tools for automating repetitive questions. Their utility scales with access to your organization’s structured and unstructured data.

🧩 Assistant Archetypes in the Enterprise

Here are the most common types of AI assistants, along with their typical use cases and Microsoft tools that support them:

| Assistant Type | Primary Use Case | LLM-Driven? | Microsoft Tech Stack | Deployment Method |
|---|---|---|---|---|
| Prompt Assistant | Repetitive document or email tasks | ✅ | Semantic Kernel, Azure OpenAI | Web or desktop |
| Analyst Copilot | Summarizing trends, KPIs | ✅ | Excel Copilot SDK, Power BI | Excel, Teams |
| Document Assistant | Search, summarize SharePoint files | ✅ | Azure AI Search + GPT | Teams, Outlook |
| Project Assistant | Track progress, draft updates | ✅ | Planner API + Semantic Kernel | Teams, web |
| Decision Support Tool | Evaluate proposals, prioritize leads | ✅ | RAG, custom plugins | Desktop app, browser |

AI assistants are not locked into a single department or workflow. Their modularity allows them to be reused across departments—often with only slight adjustments to their context or plugin set.

In the next section, we’ll examine the business case for deploying AI assistants, and why they represent one of the fastest paths to visible AI impact in your organization.

The Business Case for AI Virtual Assistants

Most organizations already have the tools, data, and workflows that AI assistants can amplify—but they lack a structured approach to deploy them. This is where the business case becomes clear: AI assistants offer fast wins with minimal risk. They solve real problems using assets you already have—documents, employees, and Microsoft tools.

For mid-to-large organizations, especially those invested in Microsoft technologies, AI assistants are a strategic multiplier that unlocks efficiency, consistency, and scale across knowledge work.

🧠 Why AI Assistants Work in the Enterprise

- Knowledge is stuck in silos.

  Employees waste hours each week looking for the right document, template, or policy, even though it’s stored somewhere in SharePoint, Teams, or OneDrive.

- Repetitive tasks eat up cognitive bandwidth.

  Writing weekly reports, summarizing meetings, drafting emails, and onboarding new employees are routine, high-effort, low-creativity tasks.

- Experts are bottlenecks.

  One subject matter expert (SME) might be asked the same question 20 times a month. AI assistants let that knowledge scale without burning out key personnel.

- AI assistants integrate where work happens.

  Unlike chatbots or standalone tools, assistants can operate inside Teams, Outlook, Excel, and even internal .NET apps, with no context switching required.

- LLMs can now act, not just talk.

  Using tools like Semantic Kernel, AI assistants can chain reasoning to actions: query a database, draft a report, email a summary, and log it to your system.

💡 AI Assistant ROI: Key Value Areas

| Area | Value Delivered |
|---|---|
| Time Savings | Assistants can substantially cut time spent on writing, summarizing, and reporting (an estimated 30–70% on well-suited tasks; measure against your own baseline) |
| Consistency | Standardized outputs from prompts improve brand tone and operational accuracy |
| Scalability | One assistant can serve hundreds of employees with zero fatigue |
| Cost Control | Assistants run on scalable Azure compute; no headcount or long training cycles |
| Adoption | Employees get real help, not more tools to learn, leading to faster buy-in |

📋 Checklist: Signs Your Business Needs an AI Assistant

Use this checklist to determine whether your organization is ready to benefit from AI assistants:

✅ Employees repeatedly ask the same questions or request the same documents

✅ You have SharePoint folders no one remembers how to search

✅ Business units maintain their own templates for similar tasks

✅ Teams spend hours creating reports that follow the same structure weekly

✅ Decision-makers need data synthesized from multiple sources

✅ You’re over-reliant on key personnel to “translate” business knowledge

✅ You’ve invested in Microsoft 365, Azure, or Power Platform—but aren’t using AI yet

✅ You need fast, low-risk wins to prove AI value before full-scale rollout

If you checked 3 or more items, your business is primed for an AI assistant pilot.

🧩 Quick Win Use Cases to Start With

| Department | Assistant Idea | Sample Prompt |
|---|---|---|
| HR | Onboarding Assistant | “Create a task list and welcome email for a new QA hire starting next Monday” |
| Finance | Report Generator | “Summarize revenue vs. forecast by product line for Q2” |
| Sales | Proposal Optimizer | “Refine this draft proposal using our best practices and add 3 persuasive points” |
| Customer Success | Account Briefing Tool | “Generate a status summary for all accounts with open tickets over 7 days” |
| IT | License Optimization Assistant | “List all inactive Teams accounts over the last 90 days” |

The next section will show you exactly what features to look for in an enterprise-grade assistant—and how Microsoft’s ecosystem makes those capabilities available to your team.

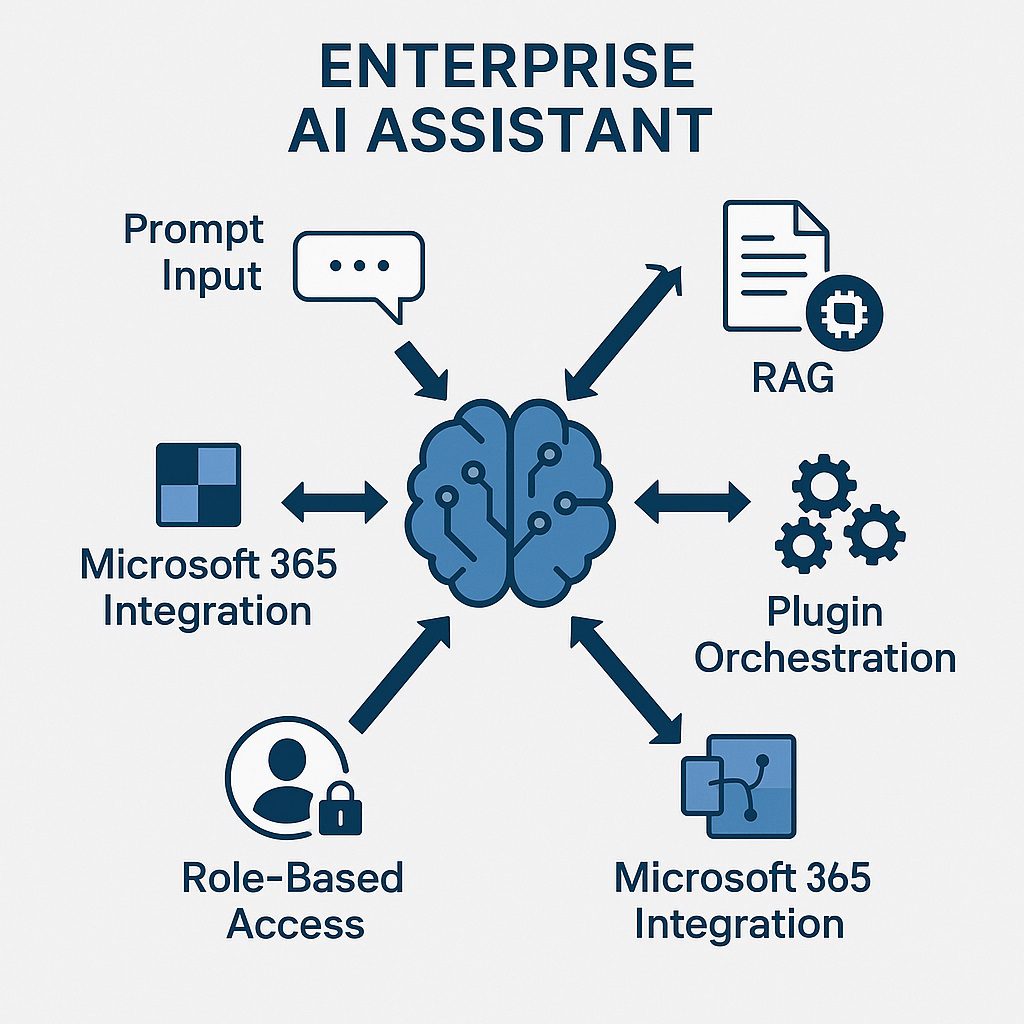

Core Capabilities of Enterprise AI Assistants

Not all AI assistants are created equal. For an assistant to succeed in a business or government setting, it must go far beyond generating a single answer from a static prompt. It must understand context, interface with enterprise systems, and take meaningful action based on user input and company knowledge.

Below are the foundational capabilities every enterprise-ready AI assistant should include—especially when built on the Microsoft stack.

1. 🧠 Prompt + Variable Input Handling

The assistant should allow users to:

- Select a predefined prompt (e.g., “Summarize this meeting transcript”)

- Provide structured input fields (e.g., file upload, project name, date range)

- Modify or override default prompt instructions

This structure makes the assistant usable by non-technical employees while still offering flexibility.
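A minimal sketch of how a predefined prompt, structured inputs, and an optional user override might be combined, with missing inputs rejected before any model call is made. It assumes a `{{$variable}}` placeholder convention modeled on Semantic Kernel's template syntax; `RenderPrompt` and the sample inputs are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Matches placeholders such as {{$meetingName}} and captures the variable name.
var placeholder = new Regex(@"\{\{\$(\w+)\}\}");

string RenderPrompt(string defaultInstructions, string body,
                    IReadOnlyDictionary<string, string> inputs,
                    string? overrideInstructions = null)
{
    // Reject the request if any placeholder has no value or a blank value.
    var missing = placeholder.Matches(body)
        .Select(m => m.Groups[1].Value)
        .Where(name => !inputs.TryGetValue(name, out var value) || string.IsNullOrWhiteSpace(value))
        .Distinct()
        .ToList();
    if (missing.Count > 0)
        throw new ArgumentException("Missing inputs: " + string.Join(", ", missing));

    // Substitute each placeholder with the user's value.
    string filled = placeholder.Replace(body, m => inputs[m.Groups[1].Value]);

    // Users may override the default instructions; the variable slots stay fixed.
    string instructions = string.IsNullOrWhiteSpace(overrideInstructions)
        ? defaultInstructions
        : overrideInstructions;
    return instructions + "\n\n" + filled;
}

var meetingInputs = new Dictionary<string, string>
{
    ["meetingName"] = "Q2 planning sync",
    ["transcript"] = "Alice: We ship on June 30. Bob: QA needs one more week."
};

string prompt = RenderPrompt(
    "Summarize this meeting transcript in three bullet points.",
    "Meeting: {{$meetingName}}\nTranscript:\n{{$transcript}}",
    meetingInputs);
Console.WriteLine(prompt);

// A missing variable is caught before any tokens are spent.
try
{
    RenderPrompt("Summarize the meeting.", "Meeting: {{$meetingName}}", new Dictionary<string, string>());
}
catch (ArgumentException ex)
{
    Console.WriteLine(ex.Message); // Missing inputs: meetingName
}
```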

2. 🗂️ Contextual Awareness

Enterprise AI assistants should apply contextual layers to every request, including:

- User identity and department (e.g., marketing vs. legal)

- Recent conversations, tasks, or documents

- Access control settings (e.g., file visibility based on Teams membership)

Example:

An assistant summarizing a project brief for a product manager should know what projects that user is assigned to and avoid referencing irrelevant files.
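One way to apply that context is to filter candidate material before it ever reaches the prompt. The sketch below assumes the user's project assignments and group memberships have already been looked up (for example, via Microsoft Graph); the user, documents, and group names are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical user context, e.g. assembled from Microsoft Graph lookups.
var user = (Name: "Jane", Department: "Product Management",
            Projects: new HashSet<string> { "Atlas", "Beacon" },
            Groups: new HashSet<string> { "pm-team", "all-staff" });

// Hypothetical candidate documents: project tag and the group allowed to read each one.
var documents = new List<(string Title, string Project, string AllowedGroup)>
{
    ("Atlas launch brief", "Atlas", "pm-team"),
    ("Beacon roadmap", "Beacon", "all-staff"),
    ("Cobalt budget", "Cobalt", "finance"),
    ("Atlas legal review", "Atlas", "legal")
};

// Keep documents the user may read AND that belong to one of their assigned projects.
var relevant = documents
    .Where(d => user.Groups.Contains(d.AllowedGroup) && user.Projects.Contains(d.Project))
    .Select(d => d.Title)
    .ToList();

// This context block would be prepended to the prompt sent to the model.
string contextBlock =
    $"User: {user.Name} ({user.Department})\n" +
    "Relevant documents:\n" +
    string.Join("\n", relevant.Select(t => "- " + t));

Console.WriteLine(contextBlock);
```

Filtering before prompting, rather than asking the model to ignore irrelevant or restricted files, keeps restricted content out of the model's input entirely.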

3. 🔍 Retrieval-Augmented Generation (RAG)

To reduce hallucination and increase relevance, enterprise assistants should use RAG pipelines:

- Pull real-time data from internal sources (SharePoint, SQL, CRM)

- Index internal documents and files for semantic search

- Append results to the prompt before calling the LLM

This helps ground the LLM response in factual, internal knowledge.
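The three RAG steps can be illustrated with a tiny in-memory example. To keep it self-contained, it swaps Azure AI Search's semantic and vector ranking for a naive keyword-overlap score, so treat it as a sketch of the retrieve-then-append pattern rather than a production retriever; the documents and the `Retrieve` and `BuildGroundedPrompt` helpers are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// A tiny in-memory "index"; in production, Azure AI Search plays this role.
var index = new List<(string Source, string Text)>
{
    ("travel-policy.docx", "Employees must book flights at least 14 days in advance."),
    ("expense-policy.docx", "Meal expenses are reimbursed up to 50 USD per day while traveling."),
    ("it-handbook.docx", "Laptops are refreshed every three years.")
};

// Lowercased word set with punctuation stripped, used for a crude relevance score.
HashSet<string> Tokenize(string text) =>
    text.ToLowerInvariant()
        .Split(new[] { ' ', '.', ',', '?', '!', ':' }, StringSplitOptions.RemoveEmptyEntries)
        .ToHashSet();

// Retrieve: rank passages by how many question words they share (a stand-in for vector search).
List<(string Source, string Text)> Retrieve(string question, int k)
{
    var queryWords = Tokenize(question);
    return index
        .Select(doc => (Doc: doc, Score: Tokenize(doc.Text).Count(w => queryWords.Contains(w))))
        .Where(x => x.Score > 0)
        .OrderByDescending(x => x.Score)
        .Take(k)
        .Select(x => x.Doc)
        .ToList();
}

// Append: put the retrieved passages, with their sources, into the prompt before the LLM call.
string BuildGroundedPrompt(string question, int k = 2)
{
    var passages = Retrieve(question, k);
    string sources = string.Join("\n", passages.Select(p => $"[{p.Source}] {p.Text}"));
    return "Answer using only the sources below and cite them. " +
           "If the answer is not in the sources, say so.\n\n" +
           $"Sources:\n{sources}\n\nQuestion: {question}";
}

Console.WriteLine(BuildGroundedPrompt("How many days in advance must flights be booked?"));
```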

4. 🔗 Plugin and Skill Orchestration

Assistants become truly powerful when they’re not just informative, but actionable. That means calling backend services or triggering processes. With Semantic Kernel and Power Automate, AI assistants can:

- Fetch real-time data from APIs

- Send emails or meeting invites

- Update CRM records or project statuses

- Write to logs or dashboards

Assistants should be modular, using skills or plugins that correspond to business capabilities.
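A plugin layer can be as simple as a registry that maps action names to vetted functions, so the model can only request actions that were explicitly registered. The sketch below illustrates that idea; it is not Semantic Kernel's plugin API, and the action names and the `Invoke` helper are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical plugin registry: each action name the model may request maps to a vetted function.
var actionLog = new List<string>();
var plugins = new Dictionary<string, Func<IReadOnlyDictionary<string, string>, string>>(StringComparer.OrdinalIgnoreCase)
{
    ["send_email"] = parameters =>
    {
        actionLog.Add($"email to {parameters["to"]}");
        return "Email queued.";
    },
    ["update_project_status"] = parameters =>
    {
        actionLog.Add($"{parameters["project"]} -> {parameters["status"]}");
        return "Status updated.";
    }
};

// Dispatch a requested action; anything not registered is refused rather than improvised.
string Invoke(string action, IReadOnlyDictionary<string, string> arguments) =>
    plugins.TryGetValue(action, out var plugin)
        ? plugin(arguments)
        : $"Action '{action}' is not registered; nothing was executed.";

// In a real assistant, the action name and arguments would come from the model's function-calling output.
Console.WriteLine(Invoke("update_project_status",
    new Dictionary<string, string> { ["project"] = "Atlas", ["status"] = "On track" }));
Console.WriteLine(Invoke("delete_all_records", new Dictionary<string, string>()));
```

Each registry entry corresponds to one business capability, which is what makes plugins reusable across assistants.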

5. 🧾 Multi-Step Reasoning

A good assistant can break a problem down, reason through intermediate steps, and synthesize output. For example:

Generate a hiring summary from these resumes, compare them to the job description, and list the top 3 candidates.

This requires:

- Extracting key points from each resume

- Matching them to JD criteria

- Scoring and ranking results

- Formatting into a summary

Semantic Kernel supports this kind of chained task execution, today mainly through automatic function calling (earlier releases also offered dedicated planners).
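The four steps can be sketched as a chain of small functions whose outputs feed the next step. In a real assistant, extraction and formatting would typically be model calls orchestrated by Semantic Kernel; here a naive keyword scan stands in for them so the example runs offline. The criteria, resumes, and scoring rule (fraction of criteria matched) are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical job-description criteria and short resume texts.
var criteria = new[] { "c#", "azure", "sql", "kubernetes" };
var resumes = new Dictionary<string, string>
{
    ["Avery"] = "Five years of C# and SQL development on Azure.",
    ["Blake"] = "Python data engineer with SQL experience.",
    ["Casey"] = "Cloud engineer: Azure, Kubernetes, C#, SQL.",
    ["Drew"] = "Frontend developer focused on TypeScript."
};

// Steps 1-2: extract key points and match them to the criteria
// (a naive keyword scan standing in for an LLM extraction call).
List<string> Extract(string resume) =>
    criteria.Where(c => resume.ToLowerInvariant().Contains(c)).ToList();

// Step 3: score each candidate as the fraction of criteria met, then rank.
double ScoreMatch(List<string> hits) => (double)hits.Count / criteria.Length;

var ranked = resumes
    .Select(r => (Name: r.Key, Matched: Extract(r.Value)))
    .Select(x => (x.Name, x.Matched, Score: ScoreMatch(x.Matched)))
    .OrderByDescending(x => x.Score)
    .ThenBy(x => x.Name)
    .Take(3)
    .ToList();

// Step 4: format the summary (an LLM would usually write the narrative around it).
foreach (var (name, matched, score) in ranked)
    Console.WriteLine($"{name}: {score:P0} of criteria ({string.Join(", ", matched)})");
```

Keeping scoring and ranking in deterministic code, and reserving the model for extraction and prose, makes the ranking auditable.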

6. 🧑‍💼 Integration with Microsoft 365

A high-quality assistant should operate where the user already works:

- In Microsoft Teams as a messaging extension

- In Outlook as an add-in or Copilot plugin

- Embedded in Excel, Word, or PowerPoint via SDK

- Available in internal .NET portals as a sidebar or command tool

Microsoft’s SDKs, Teams apps, and Graph APIs make it seamless to deploy assistants inside the M365 ecosystem.

7. 🔄 Session Memory and Continuity

Assistants should be able to:

- Recall prior inputs and actions during the same session

- Track task state and resume interrupted workflows

- Store memory securely using Azure-backed persistence

This improves UX dramatically: users can interact more fluidly, like a conversation—not a reset-every-time interface.
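A minimal sketch of session memory, assuming a simple in-memory dictionary in place of a durable store such as Azure Cache for Redis. It keeps a bounded history per session (so prompts stay within the model's context window) and a task-state marker that lets an interrupted workflow resume. `Remember`, `History`, and the turn limit are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory session store; production code would use a durable,
// access-controlled store (for example, Azure Cache for Redis) with the same shape.
const int MaxTurns = 6;
var sessions = new Dictionary<string, List<(string Role, string Text)>>();
var taskState = new Dictionary<string, string>();

void Remember(string sessionId, string role, string text)
{
    if (!sessions.TryGetValue(sessionId, out var history))
        sessions[sessionId] = history = new List<(string Role, string Text)>();
    history.Add((role, text));
    // Keep only the most recent turns so prompts stay within the context window.
    if (history.Count > MaxTurns)
        history.RemoveRange(0, history.Count - MaxTurns);
}

// Rebuild the conversation transcript that accompanies the next request.
string History(string sessionId) =>
    sessions.TryGetValue(sessionId, out var h)
        ? string.Join("\n", h.Select(t => $"{t.Role}: {t.Text}"))
        : "";

Remember("s1", "user", "Draft the weekly status update for Project Atlas.");
Remember("s1", "assistant", "Here is a draft of the weekly update.");
taskState["s1"] = "awaiting-approval"; // lets an interrupted workflow resume later

Remember("s1", "user", "Make it shorter.");
Console.WriteLine(History("s1"));
Console.WriteLine($"Task state: {taskState["s1"]}");
```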

8. 🔐 Role-Based Access Control (RBAC) and Governance

Enterprise assistants must honor user permissions and data governance:

- Only access files and systems the user is authorized for

- Audit every interaction for security reviews

- Log usage per department or individual for cost tracking

When built properly, assistants help enforce—not bypass—security.
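The three governance rules above can be sketched as a deny-by-default access check that records every request in an audit trail, which also yields per-department usage. This assumes group memberships are already known (in practice they would come from Microsoft Entra ID); the ACL entries and the `TryReadForUser` helper are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical access-control list: which security groups may read each resource.
var acl = new Dictionary<string, string[]>
{
    ["hr/salaries.xlsx"] = new[] { "hr-admins" },
    ["eng/roadmap.docx"] = new[] { "engineering", "pm-team" }
};

// Audit trail: every request is recorded, whether or not it was allowed.
var audit = new List<(DateTimeOffset At, string User, string Department, string Resource, bool Allowed)>();

bool TryReadForUser(string user, string department, ISet<string> groups, string resource)
{
    // Deny by default: unknown resources or non-matching groups are refused.
    bool allowed = acl.TryGetValue(resource, out var allowedGroups)
                   && allowedGroups.Any(group => groups.Contains(group));
    audit.Add((DateTimeOffset.UtcNow, user, department, resource, allowed));
    return allowed;
}

var janeGroups = new HashSet<string> { "pm-team" };
Console.WriteLine(TryReadForUser("jane", "Product", janeGroups, "eng/roadmap.docx")); // True
Console.WriteLine(TryReadForUser("jane", "Product", janeGroups, "hr/salaries.xlsx")); // False

// Per-department usage for cost tracking and security review.
foreach (var dept in audit.GroupBy(a => a.Department))
    Console.WriteLine($"{dept.Key}: {dept.Count()} requests, {dept.Count(a => !a.Allowed)} denied");
```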

9. 🗣️ Multimodal Input and Output (Optional)

Many assistants start with text interfaces. Adding the following can expand the assistant’s reach:

- Voice input/output using Azure AI Speech (part of Azure AI services, formerly Azure Cognitive Services)

- Structured form input for controlled data entry

- Visual outputs like graphs or annotated PDFs

✅ Summary Table: Enterprise AI Assistant Capabilities

| Capability | Description | Tools in Microsoft Ecosystem |
|---|---|---|
| Prompt + Input Handling | Structured task execution | Semantic Kernel, Adaptive Cards |
| Contextual Awareness | Tailor results by user/task | Microsoft Graph, SharePoint API |
| RAG | Ground answers in enterprise data | Azure AI Search, Azure OpenAI |
| Plugins & Actions | Perform tasks, not just talk | Power Automate, Logic Apps |
| Multi-Step Reasoning | Chain logic and decisions | Semantic Kernel (function calling; planners in older releases) |
| Microsoft 365 Integration | Operate in Teams, Outlook, Excel | Copilot SDKs, Graph API |
| Session Memory | Maintain assistant “state” | Azure Cache for Redis, SK memory stores |
| Access Control | Protect sensitive data | Microsoft Entra ID (formerly Azure AD), Microsoft Purview |
| Multimodal UX | Expand input/output options | Azure AI Speech, forms, embedded visuals |

Next, we’ll look at the Microsoft-powered tools you can use to build these assistants—without reinventing the wheel.

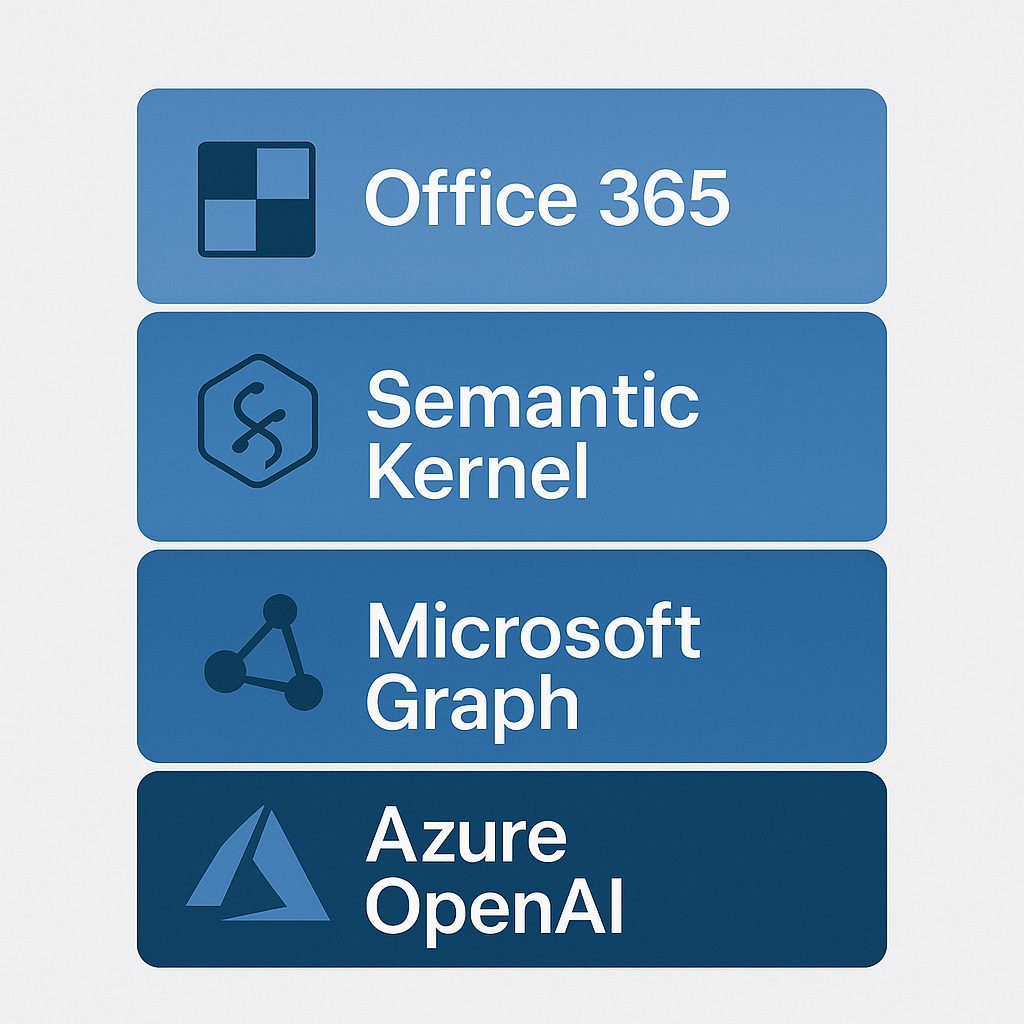

Microsoft-Powered Tools for Building AI Assistants

One of the biggest advantages for organizations running on Microsoft is that the infrastructure, APIs, and AI services you need to build AI assistants already exist. You don’t need to start from scratch. You need to orchestrate what’s already available.

Microsoft’s ecosystem—spanning Azure, Semantic Kernel, Microsoft 365, and Power Platform—offers a modular toolkit to create intelligent, secure, and scalable AI assistants that fit right into your environment.

Below is a breakdown of the key tools, when to use them, and how they fit together.

🧠 1. Azure OpenAI Service

What it does: Provides access to GPT models (e.g., GPT-3.5, GPT-4) hosted in Microsoft’s secure data centers

Why it matters: You get LLM power with enterprise-grade privacy, compliance, and network security

Ideal for:

- Generating responses from prompts

- Summarizing documents

- Producing long-form content (reports, emails, briefs)

- Natural language understanding (NLU)

Key Feature: Can be locked down to virtual networks or private endpoints, which is essential for regulated industries.

🧩 2. Semantic Kernel (SK)

What it does: An open-source SDK (C#, Python, and Java) for building AI agents that combine prompts, memory, and plugins

Why it matters: You can build assistants that reason, take action, and maintain state

Ideal for:

- Multi-step reasoning

- Plugin orchestration (e.g., fetch data, call APIs, send output)

- Building reusable AI skills and assistant templates

- Integrating AI into .NET applications

Key Feature: Lets the model select and chain plugin calls toward a natural-language goal, using automatic function calling in current releases (earlier releases provided dedicated planners).

🔍 3. Azure AI Search (for RAG)

What it does: Semantic document indexing and vector search to retrieve relevant content for LLM grounding

Why it matters: Reduces hallucinations by giving GPT your data to work with

Ideal for:

- RAG pipelines (retrieval-augmented generation)

- Searching across PDFs, DOCX, Excel, and internal docs

- Custom knowledge base assistants

Key Feature: Combine search results with prompts for high-confidence, domain-specific answers.

📤 4. Microsoft Graph API

What it does: Gives your assistant secure access to Microsoft 365 data: Outlook, Teams, SharePoint, Calendar, etc.

Why it matters: Enables contextual awareness and real-time updates

Ideal for:

- Scheduling meetings

- Sending or drafting emails

- Searching shared documents

- Pulling team/project activity

Key Feature: Supports delegated permissions, so your assistant acts on behalf of the signed-in user and can do only what that user is authorized to do.

🧠 5. Copilot SDKs (Teams, Outlook, Word, Excel)

What they do: Allow you to embed and extend Microsoft’s own Copilot experiences inside productivity tools

Why it matters: Assistants feel native and frictionless

Ideal for:

- Adding custom logic to Word or Excel

- Tailoring assistants to department-specific tools (e.g., Excel for Finance)

- Creating Copilot extensions your staff already understand

Key Feature: Taps into familiar UIs and workflows, so little or no employee retraining is needed.

⚙️ 6. Power Platform (for Non-Developers & Action Flows)

What it does: Provides no-code/low-code automation tools, connectors, and UI builders

Why it matters: Enables rapid prototyping or lets business users build micro-assistants

Use selectively, for assistants built by or for non-technical users. Great for:

- Workflow integration (Power Automate)

- UI for input/output steps (Power Apps)

- Connecting to legacy systems

Key Feature: Can be embedded into Teams, SharePoint, or custom portals.

📊 7. Azure Functions / Logic Apps / Custom APIs

What they do: Execute backend business logic or secure system calls initiated by the assistant

Why it matters: Allows assistants to call trusted, auditable services rather than open-ended API calls from LLMs

Ideal for:

- Fetching sales or inventory data

- Creating or updating records

- Interfacing with ERP/CRM systems

Key Feature: Provides an enterprise-safe execution layer for assistant-triggered actions.

🧰 Reference Table: Microsoft Tools for Building Assistants

| Tool/Service | Role in Assistant | Key Use Cases |
|---|---|---|
| Azure OpenAI | LLM generation | Text output, summarization, NLU |
| Semantic Kernel | Reasoning + orchestration | Planning, plugin calls, multi-step logic |
| Azure AI Search | RAG pipeline | Contextual grounding, document retrieval |
| Microsoft Graph | Context + actions | Emails, meetings, files, user profile |
| Copilot SDKs | In-app deployment | Teams, Word, Excel, Outlook extensions |
| Power Platform | UI + automation | Forms, actions, business app interface |
| Azure Functions | Action execution | Secure data fetch, updates, integrations |

Microsoft has built this stack with enterprise needs in mind: compliance, security, identity integration, and scalability. Rather than trying to shoehorn consumer-grade AI tools into your organization, building on the Microsoft ecosystem ensures long-term alignment with your governance, licensing, and support models.

Next, we’ll walk through the step-by-step process of building a custom AI assistant in .NET using the tools we just covered.

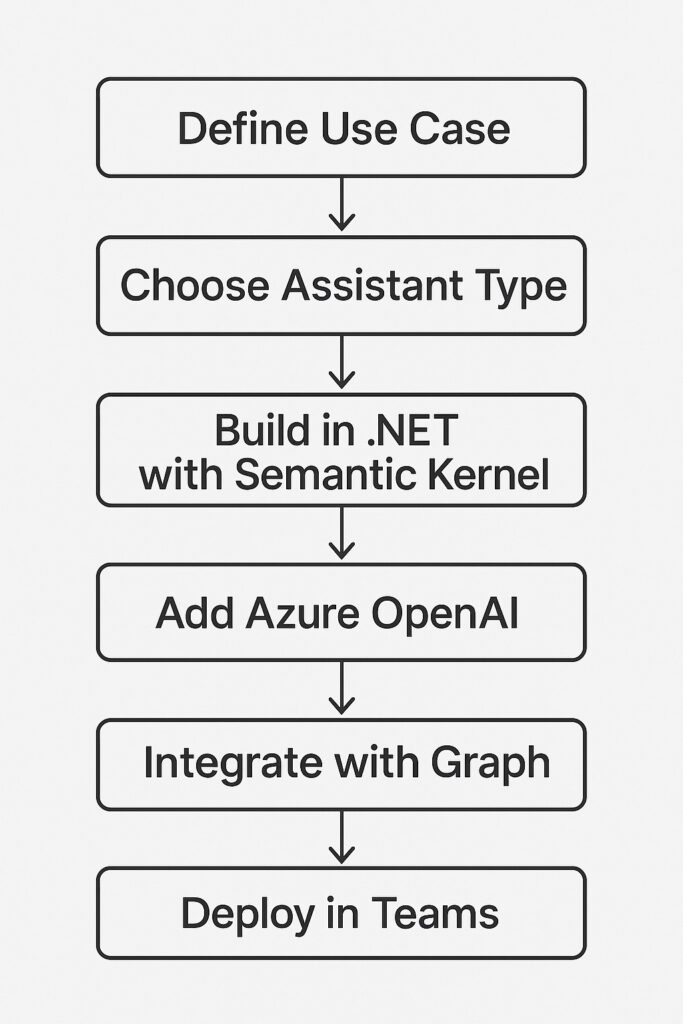

How to Build a Custom AI Assistant with .NET

Building an AI virtual assistant doesn’t require a massive data science team or a greenfield development project. If you already have a team of .NET developers and use Microsoft tools, you have everything you need.

The key is to think modularly: break the assistant down into prompts, context, logic, and outputs—then connect the pieces using Microsoft’s AI stack and best practices in software engineering.

This section outlines a pragmatic, repeatable development process for building enterprise-grade AI assistants using .NET, Semantic Kernel, and Azure services.

🛠️ Step-by-Step: Building a .NET-Based AI Assistant

1. Define the Business Need

Start by identifying a pain point or repeatable knowledge task in your organization:

- “Generate weekly client status updates”

- “Summarize SharePoint folders into briefs”

- “Draft customized emails based on CRM events”

Ask: What decision or task could be accelerated by having a smart assistant?

2. Choose the Assistant Type

Match the need to an assistant archetype:

| Business Need | Assistant Type |
|---|---|
| Repetitive writing or updates | Prompt Assistant |
| Data synthesis or trend spotting | Analyst Copilot |
| Document summarization | Document Assistant |
| Workflow recommendations | Task Advisor |
| Internal proposal evaluation | Decision Support Assistant |

Define inputs, prompt intent, and expected output format.

3. Set Up Semantic Kernel in Your .NET Project

Install Semantic Kernel via NuGet:

Install-Package Microsoft.SemanticKernel

Use SK to:

- Define prompt templates (e.g., summarization, report generation)

- Add memory storage (e.g., Azure Cache for Redis, or an in-memory store for prototyping)

- Register plugins (called “skills” in early SK releases) for actions (e.g., send email, call API)

Structure your assistant as a plugin + orchestration + memory pattern (orchestration via function calling, or planners in older SK releases), so it’s modular and extensible.

4. Add the Azure OpenAI LLM Integration

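Configure Semantic Kernel with Azure OpenAI. This snippet assumes the Semantic Kernel 1.x builder API; pre-1.0 releases used different builder and function names, so older samples may not match:

```csharp
using System;
using System.IO;
using Microsoft.SemanticKernel;

// Register an Azure OpenAI chat deployment with the kernel.
var builder = Kernel.CreateBuilder();
builder.AddAzureOpenAIChatCompletion(
    deploymentName: "gpt-4-enterprise",
    endpoint: "https://<your-resource>.openai.azure.com/",
    apiKey: "your-api-key"); // in production, load secrets from Key Vault or use managed identity
Kernel kernel = builder.Build();
```

Use prompt functions (called “semantic functions” in earlier releases) to define prompt behaviors:

```csharp
// A reusable prompt function; {{$input}} is filled from the arguments at call time.
var summarizeFunction = kernel.CreateFunctionFromPrompt("""
    Summarize the following text in 3 bullet points:
    {{$input}}
    """);

string transcriptText = File.ReadAllText("meeting-transcript.txt"); // hypothetical input file
var result = await kernel.InvokeAsync(summarizeFunction, new KernelArguments { ["input"] = transcriptText });
Console.WriteLine(result);
```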

5. Add Context with Microsoft Graph API

Use user identity and role to shape responses.

Example:

- Pull recent calendar events

- Use project team assignments from Teams

- Access user-specific SharePoint folders

This enables personalized, contextual responses like:

Summarize this week’s meetings for Jane in Product Management.

6. Implement Retrieval-Augmented Generation (RAG)

Build a pipeline that:

- Uses Azure AI Search to retrieve internal documents or indexed knowledge

- Feeds retrieved content into the LLM prompt as grounding material

This approach improves accuracy and ensures responses are based on your actual data.

7. Add Plugins and Actions

Enable assistants to act, not just reply.

Use:

- Power Automate or Logic Apps to run business workflows

- Azure Functions for API calls and secure logic

- Custom .NET plugins in SK to encapsulate business rules

Example:

- “Email this report to the regional manager”

- “Post project update summary to the Teams channel”

8. Design a Simple User Interface

Your assistant interface should:

- Present predefined task options (dropdowns, tiles)

- Let users input variables (e.g., name, time frame, file)

- Return results in readable or actionable format (text, PDF, buttons)

Deploy in:

- Teams (using Adaptive Cards and messaging extensions)

- Custom intranet or .NET web app

- Outlook add-in or Excel sidebar

9. Monitor, Audit, and Refine

Track:

- Usage metrics per department

- Output accuracy (via feedback loop)

- Plugin/API success rates

- Cost of Azure OpenAI tokens per call

Refine prompts, optimize token usage, and expand functionality iteratively.
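Token costs and feedback can be tracked with a simple per-call record aggregated by department. The prices below are placeholders, not actual Azure OpenAI rates, and the usage records are made up; in practice the token counts come from the usage data returned with each completion.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Placeholder prices per 1,000 tokens. These are NOT real Azure OpenAI rates;
// substitute the current prices for your model, deployment type, and region.
const decimal PromptPricePer1K = 0.01m;
const decimal CompletionPricePer1K = 0.03m;

// Hypothetical per-call usage records, including a thumbs-up/down feedback signal.
var calls = new List<(string Department, string PromptName, int PromptTokens, int CompletionTokens, bool Helpful)>
{
    ("Finance", "quarterly-summary", 3000, 500, true),
    ("Finance", "quarterly-summary", 2800, 450, false),
    ("HR", "onboarding-email", 800, 300, true)
};

decimal Cost(int promptTokens, int completionTokens) =>
    promptTokens / 1000m * PromptPricePer1K + completionTokens / 1000m * CompletionPricePer1K;

foreach (var dept in calls.GroupBy(c => c.Department))
{
    decimal total = dept.Sum(c => Cost(c.PromptTokens, c.CompletionTokens));
    double helpfulRate = dept.Average(c => c.Helpful ? 1.0 : 0.0);
    Console.WriteLine($"{dept.Key}: {dept.Count()} calls, cost {total:0.0000}, rated helpful {helpfulRate:P0}");
}
```

Using `decimal` for money avoids floating-point rounding drift when many small per-call costs are summed.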

🧩 Sample Architecture: .NET AI Assistant Blueprint

1. [User Interface] → [.NET App or Teams Extension]

2. [Input Prompt + Variables]

3. [Semantic Kernel], which orchestrates calls to [LLM], [Graph], and [Azure AI Search]

4. [Output: Text, Report, Action Triggered]

5. [Power Automate / Azure Function / Email API]

✅ Pro Tips for a Production-Ready Build

| Best Practice | Benefit |
|---|---|
| Use dependency injection for SK kernels and plugins | Clean modular architecture |
| Log prompt tokens and completions | Cost monitoring and debugging |
| Validate input parameters | Prevent garbage-in, garbage-out |
| Separate logic into plugins vs. prompt templates | Easier maintenance and reuse |
| Implement retry logic and error handling | Production-grade reliability |
| Integrate RBAC and telemetry | Enterprise compliance and traceability |

This build approach lets your team create reusable assistant templates that can be cloned, customized, and scaled across departments.
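The retry and error-handling practice in the table above can be sketched as a small helper with exponential backoff. Which exceptions count as transient is an assumption here (the sketch retries only `TimeoutException`); a real service client would typically also retry throttling responses (HTTP 429) and leave validation errors alone. `RetryAsync` is a hypothetical helper, not a library API.

```csharp
using System;
using System.Threading.Tasks;

// Retries an operation that fails with a transient error, doubling the wait each time.
// Assumption: only TimeoutException is treated as transient in this sketch.
async Task<T> RetryAsync<T>(Func<Task<T>> operation, int maxAttempts = 4, int baseDelayMs = 200)
{
    for (int attempt = 1; ; attempt++)
    {
        try
        {
            return await operation();
        }
        catch (TimeoutException ex) when (attempt < maxAttempts)
        {
            int delayMs = baseDelayMs * (1 << (attempt - 1)); // e.g. 200, 400, 800 ms with the defaults
            Console.WriteLine($"Attempt {attempt} failed ({ex.Message}); retrying in {delayMs} ms");
            await Task.Delay(delayMs);
        }
    }
}

// Simulated flaky model call that times out twice before succeeding.
int attemptsMade = 0;
string answer = await RetryAsync(async () =>
{
    attemptsMade++;
    await Task.Yield();
    if (attemptsMade < 3)
        throw new TimeoutException("model call timed out");
    return "summary ready";
}, baseDelayMs: 10);

Console.WriteLine($"{answer} after {attemptsMade} attempts");
```

Non-transient exceptions pass straight through the `when` filter, and the last transient failure is rethrown once `maxAttempts` is reached, so callers still see real errors.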

Next, we’ll explore where AI assistants fit in your overall business strategy—and how to roll them out for maximum adoption and impact.

Where AI Assistants Fit in Your Business Strategy

AI assistants aren’t just a productivity boost—they’re a strategic interface between human expertise and organizational systems. When deployed effectively, they become a scalable layer of intelligence, improving decision-making, reducing friction, and accelerating operations across departments.

In this section, we’ll explore where assistants fit within your enterprise architecture and digital transformation roadmap—and how to prioritize their deployment for maximum business value.

🎯 1. Internal Productivity (The Fastest Win)

AI assistants shine when used to help employees:

- Generate reports or summaries

- Automate repetitive writing (status updates, meeting briefs)

- Search for and synthesize documents

- Fill out forms or complete checklists faster

- Personalize communication at scale

Use Case Example:

An operations assistant that generates weekly performance summaries from multiple Excel files and emails them to department heads—without human intervention.

Why It Matters:

These tasks drain time across every department. Assistants reduce friction and free up human capital.

🤝 2. Customer-Facing Enhancements (B2B and B2G Contexts)

Assistants can also support client- or citizen-facing functions without becoming full chatbots:

- Generate custom documents (e.g., quotes, proposals, info sheets)

- Answer internal FAQs that support customer service teams

- Tailor communications to different stakeholder groups

- Create briefings for account managers or field reps

Use Case Example:

A customer success assistant that generates custom post-meeting summaries and proposed next steps after client calls.

Why It Matters:

Clients feel seen, supported, and responded to—without additional staff workload.

🧠 3. Decision Intelligence and Analyst Support

One of the most underutilized areas: assistants can act as decision support copilots. They:

- Analyze structured data

- Compare options against business rules

- Surface insights from reports and trends

- Recommend priorities or actions

Use Case Example:

A procurement assistant that evaluates supplier quotes, flags risks based on contract terms, and recommends top 3 based on cost + reliability.

Why It Matters:

Middle managers and analysts can become substantially more productive, potentially 2–3x on routine evaluation work, when those evaluations are delegated to AI.

🔁 4. Workflow Integration and Automation

AI assistants can also serve as gateways to automation:

- Guide users through multi-step workflows

- Validate inputs before triggering backend systems

- Summarize complex form submissions

- Log completed tasks or trigger follow-up actions

Use Case Example:

An HR assistant that guides hiring managers through onboarding tasks (IT setup, orientation scheduling, access permissions) based on role templates.

Why It Matters:

Workflows become simpler, more consistent, and auditable—without the need for staff training or documentation hunting.

🧱 5. Foundation for AI-Driven Transformation

AI assistants provide a controlled, modular, and low-risk entry point to broader AI initiatives. Once assistants are accepted, you can expand:

- From LLMs to custom machine learning models

- From task assistants to decision agents

- From document summarization to knowledge synthesis

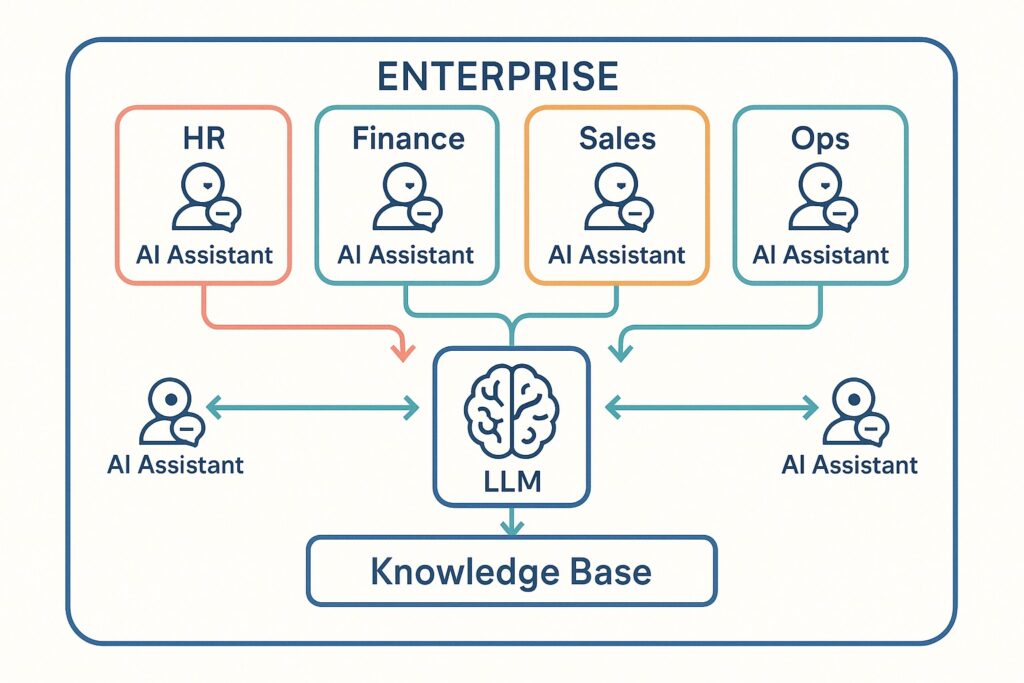

- From point solutions to federated assistants that coordinate across teams

Use Case Example:

Start with an assistant that summarizes contracts. Later, integrate it with risk models that flag clauses, and route to legal for escalation.

Why It Matters:

AI assistants are the on-ramp to enterprise AI maturity. They deliver early value while laying the groundwork for more advanced systems.

🧭 Deployment Strategy: Crawl, Walk, Run

| Phase | Objective | Example |
|---|---|---|
| Crawl | Deploy 1–2 assistants in low-risk areas | HR Onboarding, Weekly Reports |
| Walk | Expand to 3–5 departments with reusable templates | Finance Briefings, Project Updates |
| Run | Enable cross-functional assistants + custom workflows | Multi-department Copilots, Executive Dashboards |

🔁 Assistants as Reusable Enterprise Modules

Once assistants are live and refined, they become assets—like software components you can:

- Clone for new departments

- Customize for role-specific versions

- Expand with new plugins or prompt templates

- Chain together into AI workflows

Think of them not as projects, but as products.

Next, we’ll address the common challenges businesses face when deploying AI assistants—and how to mitigate them using Microsoft’s tools and enterprise software best practices.

Challenges and Mitigations

Deploying AI virtual assistants in an enterprise environment is not without risks. But those risks are predictable and manageable—especially when you leverage Microsoft’s ecosystem and apply sound engineering and governance principles.

This section highlights the most common technical, organizational, and strategic challenges—and how to address each using proven mitigation strategies.

⚠️ 1. Accuracy and Hallucination

The Problem:

LLMs like GPT may generate plausible-sounding but incorrect information—a phenomenon known as “hallucination.” This becomes especially dangerous when decisions are made based on fabricated or outdated data.

Mitigation Strategies:

- Use Retrieval-Augmented Generation (RAG) to ground LLM responses in internal, verifiable data sources (SharePoint, Azure SQL, documentation)

- Include confidence scoring and clear disclaimers in output (“Based on available data from Q2”)

- Design assistants to cite sources when presenting facts

- Limit open-ended prompts when precision is required (e.g., legal, financial summaries)

Microsoft Tools to Use:

Azure AI Search, Semantic Kernel with RAG plugins, GPT function calling + post-validation
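Citation checks lend themselves to simple post-validation: after the model answers, confirm that every source it cites was actually retrieved for that request, and flag the answer for review otherwise. The bracketed citation format and the sample answer are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Sources that were actually retrieved and placed in the prompt for this request.
var retrieved = new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "q2-revenue.xlsx", "pricing-policy.docx" };

// Hypothetical model output that is expected to cite sources in [brackets].
string answer =
    "Q2 revenue grew 8% [q2-revenue.xlsx]. " +
    "Discounts above 20% need VP approval [pricing-policy.docx]. " +
    "Churn fell to 2% [churn-report.pdf].";

// Post-validation: every cited source must be one that was actually supplied.
var cited = Regex.Matches(answer, @"\[([^\]]+)\]").Select(m => m.Groups[1].Value).ToList();
var unverified = cited.Where(c => !retrieved.Contains(c)).Distinct().ToList();

string verdict = cited.Count == 0
    ? "Flag for review: the answer cites no sources."
    : unverified.Count > 0
        ? "Flag for review. Unverified citations: " + string.Join(", ", unverified)
        : "All citations map to retrieved sources.";
Console.WriteLine(verdict);
```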

🔐 2. Data Leakage and Security

The Problem:

AI assistants may access sensitive or confidential data, potentially exposing it to unauthorized users—or worse, external LLM providers if not configured properly.

Mitigation Strategies:

- Host LLMs in Azure OpenAI so prompts and completions stay under Microsoft’s enterprise data-protection terms (for example, they are not used to train the underlying models)

- Apply RBAC (Role-Based Access Control) to every assistant interaction

- Use Microsoft Graph and Microsoft Entra ID (formerly Azure AD) to enforce identity-based filtering

- Deploy assistants with virtual network isolation or private endpoints

- Never send sensitive prompts to public APIs

Microsoft Tools to Use:

Azure OpenAI (private deployment), Microsoft Entra ID (formerly Azure AD), Microsoft Purview, Microsoft Graph with delegated permissions

🤖 3. User Adoption and Trust

The Problem:

Even the most well-built assistant won’t be used if employees don’t trust it or find it too cumbersome. Assistants that feel like “just another tool” will get ignored.

Mitigation Strategies:

- Deploy assistants inside familiar platforms (Teams, Outlook, Excel)

- Design assistants to solve real daily pain points—not generic tasks

- Use feedback buttons to gather user sentiment and improve

- Run internal demos and use champions to build trust

- Limit assistant scope at first—then expand after proven value

Microsoft Tools to Use:

Copilot SDK, Teams Messaging Extensions, Adaptive Cards, usage telemetry via Application Insights
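A feedback button can be as simple as two submit actions on the card that carries the answer. The Python sketch below builds that card as a dictionary using standard Adaptive Card elements (`TextBlock`, `Action.Submit`); the `responseId` field and the `record_feedback` handler are hypothetical conventions for tying each vote to a specific response. Wiring the card into a Teams bot is left out.

```python
import json


def build_feedback_card(answer_text: str, response_id: str) -> dict:
    # Adaptive Card with the assistant's answer and two feedback buttons.
    # Each Action.Submit sends its `data` object back to the bot when clicked.
    def vote(title: str, value: str) -> dict:
        return {
            "type": "Action.Submit",
            "title": title,
            "data": {"feedback": value, "responseId": response_id},
        }

    return {
        "type": "AdaptiveCard",
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "version": "1.4",
        "body": [{"type": "TextBlock", "text": answer_text, "wrap": True}],
        "actions": [vote("Helpful", "up"), vote("Not helpful", "down")],
    }


def record_feedback(payload: dict, log: list) -> None:
    # Hypothetical handler for the submitted data; in production, forward to telemetry.
    if payload.get("feedback") not in ("up", "down"):
        raise ValueError("unexpected feedback value")
    log.append({"responseId": payload["responseId"], "vote": payload["feedback"]})


card = build_feedback_card("Your Q2 summary is ready.", "resp-001")
print(json.dumps(card, indent=2))
```

Sending the recorded votes to Application Insights alongside the prompt version lets you see which changes actually improve user sentiment.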

🧩 4. Integration Complexity

The Problem:

Integrating AI assistants with business systems like ERPs, CRMs, file stores, and databases can become tangled—especially without clean APIs.

Mitigation Strategies:

- Start with simple API-based systems or use Graph for M365 access

- Use Power Automate for lightweight integration with legacy apps

- Wrap custom logic into Azure Functions or .NET plugin classes

- Treat each integration as a modular skill—reusable across assistants

- Create a shared internal API gateway for common data lookups

Microsoft Tools to Use:

Power Platform connectors, Azure Functions, Microsoft Graph, REST APIs, Semantic Kernel plugins
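Treating each integration as a modular skill can be as lightweight as a shared registry that multiple assistants draw from. In this Python sketch the registry, the `skill` decorator, and the `Assistant` class are hypothetical; in a Semantic Kernel app the equivalent unit would be a plugin function, and the stub bodies would call Microsoft Graph, an Azure Function, or your internal API gateway.

```python
from typing import Callable, Dict

# Shared registry: skill name -> function. Every assistant draws from the same library.
SKILLS: Dict[str, Callable[..., str]] = {}


def skill(name: str):
    # Decorator that registers a function as a named, reusable skill.
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        if name in SKILLS:
            raise ValueError(f"skill {name!r} is already registered")
        SKILLS[name] = fn
        return fn
    return register


@skill("GetClientList")
def get_client_list(region: str) -> str:
    # Stub: a real version would call your CRM or an internal API gateway.
    return f"clients in {region}: (stub data)"


@skill("SummarizeMeeting")
def summarize_meeting(notes: str) -> str:
    # Stub: a real version would send the notes to the model with a summary prompt.
    return notes.split(".")[0] + "."


class Assistant:
    # An assistant is a name plus the subset of shared skills it may call.
    def __init__(self, name: str, allowed_skills: list):
        unknown = [s for s in allowed_skills if s not in SKILLS]
        if unknown:
            raise KeyError(f"unknown skills: {unknown}")
        self.name = name
        self.allowed = set(allowed_skills)

    def invoke(self, skill_name: str, **kwargs) -> str:
        if skill_name not in self.allowed:
            raise PermissionError(f"{self.name} is not allowed to use {skill_name}")
        return SKILLS[skill_name](**kwargs)


sales = Assistant("SalesAssistant", ["GetClientList", "SummarizeMeeting"])
ops = Assistant("OpsAssistant", ["SummarizeMeeting"])  # reuses the same skill
print(ops.invoke("SummarizeMeeting", notes="Line 3 is back up. Parts arrive Friday."))
```

The allow-list per assistant doubles as a simple governance control: adding a skill to an assistant becomes an explicit, reviewable change.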

💰 5. Cost Management (Especially Token Usage)

The Problem:

High token usage in LLMs—especially with long prompts or frequent calls—can drive up Azure OpenAI costs if left unchecked.

Mitigation Strategies:

- Use smaller models (e.g., GPT-3.5 instead of GPT-4) for lightweight tasks

- Limit response length and truncate unnecessary input text

- Cache common outputs when possible

- Log and analyze token usage by prompt and department

- Provide token budgets per assistant or team

Microsoft Tools to Use:

Azure OpenAI usage logs, prompt engineering best practices, Semantic Kernel with token management helpers
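Caching, input truncation, and per-assistant budgets can share one wrapper around the model call. This Python sketch is hypothetical throughout: `BudgetedClient` is an illustrative name, the model call is injected as a plain function, and token counts use the common rule of thumb of roughly four characters per English token rather than a real tokenizer. Azure OpenAI responses report actual token usage, which is what you would log in production.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; not a real tokenizer


def estimate_tokens(text: str) -> int:
    return max(1, len(text) // CHARS_PER_TOKEN)


def truncate_to_tokens(text: str, max_tokens: int) -> str:
    # Cut input so its *estimated* size fits the limit.
    return text[: max_tokens * CHARS_PER_TOKEN]


@dataclass
class BudgetedClient:
    budget_tokens: int                # e.g., a monthly allowance for one assistant
    call_model: Callable[[str], str]  # injected model call (an Azure OpenAI request in practice)
    max_input_tokens: int = 2000
    used_tokens: int = 0
    cache: Dict[str, str] = field(default_factory=dict)

    def ask(self, prompt: str) -> str:
        prompt = truncate_to_tokens(prompt, self.max_input_tokens)
        if prompt in self.cache:  # exact-match cache hit: no tokens spent
            return self.cache[prompt]
        input_cost = estimate_tokens(prompt)
        # Check the input estimate up front; output tokens are added after the call.
        if self.used_tokens + input_cost > self.budget_tokens:
            raise RuntimeError("token budget exceeded for this assistant")
        answer = self.call_model(prompt)
        self.used_tokens += input_cost + estimate_tokens(answer)
        self.cache[prompt] = answer
        return answer


client = BudgetedClient(budget_tokens=100, call_model=lambda p: "ok")
client.ask("a" * 40)  # spends ~10 input tokens + 1 output token
client.ask("a" * 40)  # served from cache, spends nothing
print(client.used_tokens)  # 11
```

An exact-match cache only helps with repeated prompts (recurring reports, standard FAQs); varied free-form questions will mostly miss it.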

⚙️ 6. Governance and Change Control

The Problem:

AI assistants may evolve rapidly—changing prompts, actions, or context—without oversight, creating inconsistency or risk.

Mitigation Strategies:

- Use version control for prompt templates and skills

- Implement a QA process before publishing new assistant functionality

- Create a governance policy for assistant roles, capabilities, and usage

- Manage assistant changes like software releases, complete with changelogs

Microsoft Tools to Use:

GitHub (for prompt source control), Azure DevOps pipelines, custom admin dashboards
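Whether prompts live in GitHub or a shared library, the core rules are the same: every published change gets a version number, a changelog entry, and a stable identifier you can log next to each response. The in-memory `PromptRegistry` below is a hypothetical Python sketch of those rules; in practice the history would come from Git commits, and `publish` would run inside a reviewed pipeline.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str
    change_note: str

    @property
    def digest(self) -> str:
        # Short content hash so logs can record exactly which prompt produced a response.
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:12]


class PromptRegistry:
    def __init__(self) -> None:
        self._versions: Dict[str, List[PromptVersion]] = {}

    def publish(self, name: str, template: str, change_note: str) -> PromptVersion:
        # Every change gets a new version number and a mandatory changelog entry.
        if not change_note.strip():
            raise ValueError("a change note is required")
        history = self._versions.setdefault(name, [])
        if history and history[-1].template == template:
            raise ValueError("template unchanged; nothing to publish")
        pv = PromptVersion(name, len(history) + 1, template, change_note)
        history.append(pv)
        return pv

    def latest(self, name: str) -> PromptVersion:
        return self._versions[name][-1]

    def changelog(self, name: str) -> List[str]:
        return [f"v{v.version} ({v.digest}): {v.change_note}" for v in self._versions[name]]


reg = PromptRegistry()
reg.publish("weekly-report", "Summarize {{$notes}} for {{$audience}}.", "Initial version")
reg.publish(
    "weekly-report",
    "Summarize {{$notes}} for {{$audience}}. Flag missed deadlines.",
    "Add deadline check after QA review",
)
for line in reg.changelog("weekly-report"):
    print(line)
```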

🧪 7. Testing and Evaluation

The Problem:

Testing an AI assistant isn’t like testing traditional software: outputs are not fully deterministic, and success is often subjective.

Mitigation Strategies:

- Use scenario-based testing with real user inputs

- Implement automated response validation for structured outputs

- Gather end-user feedback loops inside the assistant interface

- Measure assistant quality with metrics like response relevance, time saved, and satisfaction

Microsoft Tools to Use:

Playwright for UI testing, test harnesses for SK, user feedback buttons in Adaptive Cards
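For structured outputs, automated validation means checking the model's response against a contract before anything downstream consumes it. This Python sketch assumes the assistant was prompted to return JSON with `summary`, `risks`, and `confidence` fields; that schema and the `validate_report` helper are hypothetical. Each captured real-user scenario then becomes a test case with an expected pass or fail.

```python
import json

# Hypothetical output contract the assistant is prompted to follow.
REQUIRED_FIELDS = {"summary": str, "risks": list, "confidence": str}
ALLOWED_CONFIDENCE = {"low", "medium", "high"}


def validate_report(raw: str) -> list:
    """Return the problems found in a model's JSON output; an empty list means valid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in data:
            problems.append(f"missing field: {name}")
        elif not isinstance(data[name], expected_type):
            problems.append(f"{name} should be of type {expected_type.__name__}")
    confidence = data.get("confidence")
    if isinstance(confidence, str) and confidence not in ALLOWED_CONFIDENCE:
        problems.append("confidence must be low, medium, or high")
    return problems


# Scenario-based cases: (captured model output, should it pass?)
SCENARIOS = [
    ('{"summary": "On track", "risks": [], "confidence": "high"}', True),
    ('{"summary": "On track", "risks": "none"}', False),
    ("Sure! Here is your report:", False),
]
for raw, should_pass in SCENARIOS:
    result = validate_report(raw)
    print("PASS" if (not result) == should_pass else "FAIL", result)
```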

✅ Summary: Mitigation Matrix

| Challenge | Key Microsoft Tools | Primary Strategy |
|---|---|---|
| Accuracy | Azure AI Search, RAG | Ground responses in enterprise data |
| Security | Azure OpenAI, RBAC | Restrict access and isolate systems |
| Adoption | Teams, Copilot SDK | Build inside familiar tools |
| Integration | Power Automate, Graph, SK Plugins | Modular connectors to systems |
| Cost | Token tracking, SK logs | Optimize prompt engineering |
| Governance | GitHub, Azure DevOps | Version control + QA |
| Testing | Playwright, Adaptive Cards feedback | Scenario testing and feedback loops |

With proper architecture and planning, most assistant risks can be reduced to a manageable level, making assistants one of the most cost-effective, secure, and governable AI initiatives you can launch in your organization.

Next, we’ll explore how to scale AI assistants across your enterprise while keeping architecture consistent and costs manageable.

IX. Scaling AI Assistants Across the Enterprise

One AI assistant might save your team a few hours a week. But ten assistants deployed across five departments? That’s when you start transforming how your business operates. At scale, assistants become part of your organization’s operational muscle—an internal network of intelligent agents that handle the busywork, organize the chaos, and support better decisions.

Scaling isn’t just about building more assistants—it’s about building a repeatable framework so that each new deployment is faster, cheaper, and more aligned with strategic goals.

🧱 The Foundation: Templates, Standards, and Patterns

Before scaling, standardize the core components:

| Element | Standardization Tip |
|---|---|
| Prompt Templates | Store and version them in GitHub or a shared prompt library |
| Plugins and Skills | Create modular .NET classes or Semantic Kernel skills per function (e.g., “GetClientList”, “SummarizeMeeting”) |
| UI Patterns | Use Adaptive Cards or Razor components to create consistent input/output experiences |
| Security Profiles | Define assistant RBAC policies by department or use case |
| Telemetry | Log usage, response time, and token cost with Application Insights or custom dashboards |

Result: Future assistants can be cloned, modified, and launched without reinventing the wheel every time.
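Once these five elements are standardized, an assistant can be described as data rather than code, which is what makes cloning cheap. The frozen dataclass below is a hypothetical Python sketch: the field names, the `name@version` prompt reference, and the `GetLedgerTotals` skill are illustrative assumptions. `dataclasses.replace` returns a modified copy, so the base definition stays untouched.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class AssistantConfig:
    # Hypothetical shape of a standardized assistant definition.
    name: str
    prompt_template: str          # name@version from the shared prompt library
    skills: Tuple[str, ...]       # shared plugin/skill names
    ui_card: str                  # Adaptive Card template ID
    rbac_groups: Tuple[str, ...]  # groups allowed to use the assistant
    log_tokens: bool = True       # telemetry on by default


BASE_REPORTER = AssistantConfig(
    name="ReportGenerator",
    prompt_template="weekly-report@3",
    skills=("SummarizeMeeting",),
    ui_card="report-card",
    rbac_groups=("all-staff",),
)

# Clone for a new department by overriding only what differs.
finance_reporter = replace(
    BASE_REPORTER,
    name="FinanceReportGenerator",
    skills=BASE_REPORTER.skills + ("GetLedgerTotals",),
    rbac_groups=("finance",),
)
print(finance_reporter)
```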

📊 Phase Strategy: Crawl, Walk, Run

Start with low-risk pilots, then scale horizontally and vertically.

| Phase | Objective | Examples |
|---|---|---|
| Crawl | Prove value in 1–2 departments | HR onboarding, Finance weekly reports |
| Walk | Expand assistant library, build governance | Sales proposals, Project updates, Legal summarization |
| Run | Orchestrate assistants across departments, integrate with data lakes or enterprise systems | Multi-agent workflows, enterprise dashboards, voice-enabled assistants |

🔁 Horizontal vs. Vertical Scaling

Horizontal scaling means deploying similar assistants across departments:

- A report generator used by HR, Finance, and Ops

- A document summarizer for Contracts, Legal, and Risk

- A status briefing assistant for Sales, Support, and Projects

Vertical scaling means deepening assistant capabilities:

- Adding plugins to perform actions (e.g., log to CRM, post to Teams)

- Integrating data analytics or ML scoring

- Expanding context memory and assistant history

- Evolving into autonomous multi-agent workflows

🧠 Assistant Federation (Advanced Pattern)

As you scale, assistants can start talking to each other or cooperating on shared objectives:

- A Project Assistant pulls status from Team Leads’ assistants

- A Compliance Assistant queries HR and Legal Assistants for document traces

- A Proposal Assistant requests pricing data from a Finance Assistant

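Use Semantic Kernel’s orchestration features (newer releases favor automatic function calling over the original planners) or a dedicated orchestrator service to manage inter-assistant logic.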
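Whatever orchestration layer you choose, inter-assistant calls need a router, an audit trail, and a guard against assistants calling each other in loops. The Python sketch below shows those three concerns with stub assistants; `Orchestrator`, the handler signature, and the depth limit are hypothetical design choices, not Semantic Kernel APIs.

```python
from typing import Callable, Dict, List

# A handler receives (request, orchestrator) and returns a reply string.
Handler = Callable[..., str]


class Orchestrator:
    """Routes requests between assistants, keeps an audit trail, and stops call loops."""

    def __init__(self, max_depth: int = 3) -> None:
        self.assistants: Dict[str, Handler] = {}
        self.trace: List[str] = []
        self.max_depth = max_depth
        self._depth = 0

    def register(self, name: str, handler: Handler) -> None:
        self.assistants[name] = handler

    def ask(self, name: str, request: str) -> str:
        if self._depth >= self.max_depth:
            raise RecursionError("assistant call chain is too deep")
        self.trace.append(f"{name}: {request}")
        self._depth += 1
        try:
            return self.assistants[name](request, self)
        finally:
            self._depth -= 1


def finance_assistant(request: str, orch: Orchestrator) -> str:
    # Stub: a real Finance Assistant would look up pricing in your ERP.
    return "pricing: (stub figures)"


def proposal_assistant(request: str, orch: Orchestrator) -> str:
    # Delegates the pricing question to the Finance Assistant instead of guessing.
    pricing = orch.ask("Finance", f"pricing for {request}")
    return f"Proposal draft for {request} [{pricing}]"


orch = Orchestrator()
orch.register("Finance", finance_assistant)
orch.register("Proposal", proposal_assistant)
print(orch.ask("Proposal", "Contoso renewal"))
print(orch.trace)
```

The trace gives compliance teams a record of which assistant asked which other assistant for what, which matters once assistants exchange data across departments.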

🚀 Deployment Options for Scale

| Method | Best For | Tools |
|---|---|---|
| Microsoft Teams Apps | Internal rollout, employee interaction | Teams SDK, Adaptive Cards |
| Outlook/Excel Add-ins | Productivity integration | Office.js, Copilot SDK |
| .NET Web Portals | Internal tools or admin-facing apps | Blazor, ASP.NET Core, SK |
| Edge Widgets or Browser Extensions | Lightweight usage or assistive overlays | JavaScript + REST calls to a backend SK service |
| Mobile App Interfaces | Field ops, inspections, logistics | .NET MAUI + SK, or PWA + REST |

📈 Metrics to Track as You Scale

Define clear KPIs to evaluate impact and adjust resources:

| Metric | What It Measures |
|---|---|
| Usage Frequency | How often assistants are used (by department or user role) |
| Time Saved | Time saved per task against a pre-assistant benchmark (e.g., a report that used to take 45 minutes) |
| Adoption Rate | Active assistant users vs. eligible users or teams |
| Output Quality Feedback | Thumbs-up/down ratings or satisfaction surveys |
| Token Spend | Azure OpenAI token costs per task or department |
| Plugin/API Utilization | How often assistants trigger backend logic |
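Most of these KPIs fall out of a single usage log, provided each assistant call records the user, their department, tokens consumed, estimated minutes saved, and any feedback vote. This Python sketch assumes that event shape (`UsageEvent` and `department_kpis` are hypothetical) and takes the token price as a parameter rather than hard-coding a rate, since Azure OpenAI pricing varies by model and region. In production, the events would come from Application Insights or your own telemetry store.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class UsageEvent:
    user: str
    department: str
    tokens: int
    minutes_saved: float              # benchmark time minus actual time (assumed measured)
    thumbs_up: Optional[bool] = None  # None when the user gave no feedback


def department_kpis(
    events: List[UsageEvent],
    eligible_users: Dict[str, int],
    price_per_1k_tokens: float,
) -> Dict[str, Dict[str, float]]:
    by_dept: Dict[str, List[UsageEvent]] = defaultdict(list)
    for e in events:
        by_dept[e.department].append(e)

    report = {}
    for dept, evs in by_dept.items():
        votes = [e.thumbs_up for e in evs if e.thumbs_up is not None]
        report[dept] = {
            "usage_count": len(evs),
            "adoption_rate": len({e.user for e in evs}) / eligible_users[dept],
            "hours_saved": sum(e.minutes_saved for e in evs) / 60,
            "positive_feedback": sum(votes) / len(votes) if votes else float("nan"),
            "token_spend": sum(e.tokens for e in evs) / 1000 * price_per_1k_tokens,
        }
    return report


events = [
    UsageEvent("alice", "HR", 1200, 30, True),
    UsageEvent("bob", "HR", 800, 15),
    UsageEvent("alice", "HR", 500, 45, False),
]
# The price below is a placeholder; use your model's actual Azure OpenAI rate.
print(department_kpis(events, eligible_users={"HR": 4}, price_per_1k_tokens=0.01))
```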

🛠 Platform Infrastructure Tips

To ensure scalable operations:

- Host assistants as microservices, each with its own API or interface layer

- Share plugin libraries across assistants to minimize duplication

- Use Azure Function Apps for serverless backend logic (auto-scale support)

- Orchestrate complex workflows with SK (newer releases favor automatic function calling over planners), and add explicit fallback handling

- Add admin dashboards for prompt version control, token usage, and assistant health
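Fallback handling usually means two things: retrying transient failures, and degrading to an alternative (a smaller model, a cached answer, or a clear error) once retries run out. The Python sketch below shows that pattern with injected model functions; `call_with_fallback` is hypothetical, and which exceptions count as transient is an assumption you would tune for your client library.

```python
import time
from typing import Callable, List, Sequence

TRANSIENT_ERRORS = (TimeoutError, ConnectionError)  # assumption: tune for your client library


def call_with_fallback(
    prompt: str,
    models: Sequence[Callable[[str], str]],
    retries: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try each model in order, retrying transient failures with exponential backoff."""
    errors: List[str] = []
    for model in models:
        for attempt in range(retries + 1):
            try:
                return model(prompt)
            except TRANSIENT_ERRORS as exc:
                errors.append(f"{getattr(model, '__name__', 'model')} attempt {attempt + 1}: {exc!r}")
                if attempt < retries:
                    sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ... with the defaults
    # Non-transient errors propagate immediately; this line is reached only when
    # every model has exhausted its retries.
    raise RuntimeError("all models failed: " + "; ".join(errors))


def primary_model(prompt: str) -> str:
    raise TimeoutError("primary deployment timed out")  # simulated outage


def fallback_model(prompt: str) -> str:
    return f"(smaller model) answer to: {prompt}"


delays: List[float] = []
print(call_with_fallback("Summarize today's tickets", [primary_model, fallback_model], sleep=delays.append))
print(delays)  # [0.5, 1.0]
```

Injecting `sleep` keeps the function testable without real waits; in production you would leave the default.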

🧩 Organizational Playbook

Create an internal playbook with:

- Approved use cases per department

- Templates for prompts, Adaptive Cards, and UIs

- Best practices for prompt engineering

- Security review checklist

- Pilot-to-production deployment guide

Empower both developers and business units to request or customize assistants—without waiting for IT.

Scaling AI assistants isn’t just technical—it’s cultural. By creating an ecosystem where assistants are useful, usable, and trustworthy, your organization will naturally adopt more of them. And each one becomes another multiplier in your digital transformation.

Up next, we’ll share some case studies and practical examples that illustrate what AI assistants look like in the real world—and the ROI they deliver.

X. Case Studies and Examples

By this point, the value of AI virtual assistants in enterprise settings should be clear, but nothing drives the point home like seeing it in action. Below is a mix of fictional composite case studies and real-world public examples that illustrate how AI assistants perform in the wild, across industries and roles.

📚 Fictional Example #1 – Mid-Sized Manufacturing Company

Company: NorthRiver Components

Size: 1,100 employees

Stack: Microsoft 365, Dynamics 365, Azure

Problem:

Project managers spent 3–4 hours each week manually writing project update reports and preparing for executive status meetings.

Solution:

A custom AI assistant was built in .NET using Semantic Kernel. It:

- Pulled project plans and team notes from SharePoint

- Summarized milestones, risks, and progress

- Drafted weekly email updates for stakeholders

- Offered follow-up recommendations based on missed deadlines

Result:

- 70% reduction in reporting time

- Consistency across all departments

- Improved leadership visibility into project delays

- ROI realized within 3 weeks of deployment

💼 Fictional Example #2 – Government HR Department

Agency: State Dept. of Public Infrastructure

Size: 600+ employees

Stack: Microsoft 365 Government Cloud

Problem:

New employee onboarding required a 22-step manual checklist across IT, HR, Security, and Facilities—often leading to missed steps and slow starts.

Solution:

An AI assistant was embedded in Microsoft Teams that:

- Took the employee role as input

- Generated onboarding instructions for each stakeholder

- Pre-filled forms based on Active Directory and job description

- Posted updates to responsible departments

Result:

- Onboarding time cut from 10 days to 4

- Zero missed compliance steps

- Assistant reused across 12 different job roles

📈 Fictional Example #3 – Financial Services Firm

Company: ApexInvest Analytics

Size: 300 employees

Stack: Azure, Microsoft 365, Power BI

Problem:

Analysts needed to write quarterly reports summarizing customer portfolios—a time-consuming process involving Excel exports, notes, and PowerPoint.

Solution:

A custom “Analyst Copilot” was built using:

- Azure OpenAI + Semantic Kernel

- Power BI Embedded

- Plugins to summarize trends and flag outliers

Result:

- Report generation time dropped from 3 hours to 20 minutes

- Analysts could scale to 2x–3x more client accounts

- Managers received better insights, faster

🧠 Real-World Example #1 – Microsoft Copilot for Excel

Use Case:

Microsoft Excel’s integrated Copilot is a functional assistant that:

- Understands natural language queries

- Summarizes trends and insights

- Automatically generates charts and calculations

Example Prompt:

Summarize Q2 revenue growth by region and highlight any underperforming segments.

Impact:

Reduces the need for complex formula building or pivot table setup—empowering even non-technical users to perform advanced analysis.

🗃️ Real-World Example #2 – EY + Microsoft Collaboration

Use Case:

Ernst & Young (EY) partnered with Microsoft to develop AI assistants for legal contract review and financial auditing.

Outcome:

- Legal assistant analyzed 30,000+ contracts for compliance risks

- Audit assistant flagged anomalies in large financial datasets

Tech Stack: Azure OpenAI, Semantic Kernel, Power Platform

Result:

- Saved thousands of hours in manual review

- Reduced error rates

- Enabled higher-value human audit work

🗣️ Real-World Example #3 – Accenture’s Internal Assistants

Use Case:

Accenture has built more than 20 internal AI assistants for everything from HR support to internal IT ticket handling.

Key Features:

- LLM-powered interaction

- Embedded in Microsoft Teams

- Designed for both structured and unstructured tasks

Result:

- Assistants handled 50–60% of Tier 1 support queries

- Reduced employee wait times

- Improved satisfaction without increasing staff

💰 ROI Snapshot: Common Assistant Wins

| Metric | Typical Outcome |
|---|---|
| Time Saved | 30–70% reduction in repetitive tasks |
| Employee Capacity | 2–3x more throughput on analysis or documentation |
| Consistency | Uniform formats and fewer errors across reports |
| Cost | Often <$200/month per department on Azure usage |
| Deployment Time | First assistant in production within 2–4 weeks |
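ROI figures like these come from simple arithmetic: hours saved times a loaded labor rate, compared with monthly Azure spend. This Python sketch makes that formula explicit. The inputs in the usage line are placeholders loosely based on the fictional NorthRiver example (3–4 hours per week, a 70% reduction), and the $60/hour rate is purely hypothetical; replace them with your own baseline measurements.

```python
def monthly_roi(
    users: int,
    hours_per_week_on_task: float,
    reduction: float,           # fraction of task time saved, e.g. 0.3 to 0.7
    loaded_hourly_rate: float,  # fully loaded labor cost per hour (your figure)
    monthly_azure_cost: float,
    weeks_per_month: float = 52 / 12,
) -> dict:
    if monthly_azure_cost <= 0:
        raise ValueError("monthly_azure_cost must be positive")
    hours_saved = users * hours_per_week_on_task * reduction * weeks_per_month
    value = hours_saved * loaded_hourly_rate
    return {
        "hours_saved": hours_saved,
        "value_of_time_saved": value,
        "net_monthly_benefit": value - monthly_azure_cost,
        "roi_multiple": value / monthly_azure_cost,
    }


# Placeholder inputs: 10 project managers, 3.5 h/week on reports, 70% reduction,
# a hypothetical $60/hour loaded rate, and $200/month in Azure usage.
result = monthly_roi(10, 3.5, 0.70, 60.0, 200.0)
for key, val in result.items():
    print(f"{key}: {val:,.2f}")
```

Time saved only becomes financial value if the freed hours go to other work, so treat the result as an upper bound rather than a cash saving.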

🧩 Lessons Learned Across Case Studies

- Start small but plan for scale. Pilots that are too narrow may not show systemic value, while pilots that are too broad tend to stall. Aim for one assistant per high-friction process.

- Involve end users in the loop. The best assistant designs came from the people doing the work—not just IT or leadership.

- Ground everything in your data. The most useful assistants were built around internal knowledge and document repositories—not just general intelligence.

- Treat assistants as products, not prototypes. Add testing, feedback loops, and metrics tracking from day one.

These examples show that AI assistants are not theoretical. With Microsoft’s ecosystem and a solid .NET foundation, they are a practical, scalable way to drive enterprise impact today.

Next, we’ll wrap up the guide with a summary and actionable recommendations for your organization.

XI. Summary and Recommendations

AI virtual assistants are no longer a futuristic concept—they’re ready now, and they’re reshaping how work gets done in Microsoft-based enterprises. They represent one of the most accessible, modular, and strategic forms of applied AI, especially when built using technologies your team already understands: .NET, Azure, and Microsoft 365.

🧠 Recap: Why AI Assistants Matter

- They’re versatile. AI assistants support reporting, summarization, task coordination, onboarding, decision-making, and more.

- They’re powerful. When integrated with Microsoft Graph, Semantic Kernel, and Azure AI, they can reason, act, and personalize responses at scale.

- They’re fast to build. Using the Microsoft ecosystem, your team can deploy a pilot in weeks—not quarters.

- They’re trustworthy. With enterprise-grade identity management, private LLM hosting, and secure plugin integration, they can meet compliance and governance requirements.

🧭 Where to Start

You don’t need a 12-month roadmap to get value. You need one assistant that works—in a real department, solving a real problem. Here’s a practical rollout plan:

🔹 Step 1: Identify a High-Friction Task

Look for repetitive knowledge work:

- Weekly reporting

- Document summarization

- Email drafting

- Data-driven decision support

🔹 Step 2: Build a Lightweight Assistant

Use Semantic Kernel in a .NET app. Start with:

- 1–2 prompt templates

- A small set of input variables

- Internal data sources (SharePoint, Excel, Teams)
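A first template really can be this small. The sketch below uses Semantic Kernel's `{{$variable}}` placeholder style so it reads like an SK prompt, but `render` is a hypothetical stand-in written in plain Python, not SK's template engine. In a real .NET app, SK would render the template and send it to your Azure OpenAI deployment.

```python
import re

WEEKLY_REPORT = (
    "You are a project reporting assistant.\n"
    "Write a weekly status update for {{$project}} aimed at {{$audience}}.\n"
    "Use only the notes below and flag any missed deadlines.\n\n"
    "Notes:\n{{$notes}}"
)

_VAR = re.compile(r"\{\{\$(\w+)\}\}")


def render(template: str, **variables: str) -> str:
    # Fail loudly if an input variable is missing instead of sending a broken prompt.
    missing = sorted(set(_VAR.findall(template)) - set(variables))
    if missing:
        raise KeyError(f"missing template variables: {missing}")
    # A function replacement inserts values literally (no backslash processing).
    return _VAR.sub(lambda m: variables[m.group(1)], template)


print(render(
    WEEKLY_REPORT,
    project="Plant 2 retrofit",
    audience="the executive team",
    notes="- Vendor contract signed\n- Commissioning slipped one week",
))
```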

🔹 Step 3: Deploy in a Familiar Interface

Deploy in Microsoft Teams, Outlook, or your company portal—where the user already works.

🔹 Step 4: Monitor and Improve

Track:

- Usage metrics

- Time saved

- Cost per call

- User satisfaction

Refine prompts, expand capabilities, and replicate the assistant elsewhere.

📦 Your Toolkit

| Resource | Purpose |
|---|---|
| Semantic Kernel | Reasoning and plugin orchestration |
| Azure OpenAI | Secure LLM access |
| Azure AI Search | Grounding via RAG |
| Microsoft Graph | Contextual access to M365 data |
| Power Automate / Azure Functions | Backend integration and workflows |
| Teams / Outlook SDKs | Embedded assistant experiences |
| GitHub + Azure DevOps | Prompt versioning and CI/CD |

🔁 From One Assistant to an Ecosystem

Your first assistant proves the value. Build your second as a platform:

- Use standardized templates

- Build modular plugins

- Apply consistent governance

- Collect cross-department feedback

With this approach, you’ll create an ecosystem of reusable, intelligent tools that support—and scale with—your organization.

✅ Final Recommendations

| Goal | Recommendation |
|---|---|
| Fast ROI | Start with a narrow, repeatable use case |
| Enterprise trust | Host in Azure and integrate RBAC from the start |
| Scalability | Build assistants as modular products, not monoliths |
| Adoption | Deploy inside Microsoft 365 tools with high user traffic |
| Cost control | Use token-efficient models and monitor usage weekly |
| Future-readiness | Treat assistants as stepping stones to autonomous agents and AI-powered systems |

AI virtual assistants aren’t just helpful; they’re the gateway to deeper AI transformation. Start small, build smart, and scale with confidence.

Next up: a set of frequently asked questions covering the practical details of building and running AI assistants.

Frequently Asked Questions (FAQs)

What is the difference between an AI virtual assistant and a chatbot?

AI virtual assistants are LLM-powered tools that handle complex, variable tasks using natural language and dynamic context. Chatbots typically follow rule-based flows with limited scope. Assistants focus on knowledge work; chatbots often serve customer support or lead capture.

Can I build an AI assistant without advanced AI expertise?

Yes. If you have .NET developers and use Microsoft tools, you can use Semantic Kernel, Azure OpenAI, and Graph APIs to build powerful assistants without needing a data science team.

How long does it take to build an AI assistant for internal use?

Most basic assistants can be prototyped in 2–4 weeks. With Microsoft’s ecosystem, you can rapidly assemble working solutions using existing systems, prompts, and modular plugins.

Is Azure OpenAI secure enough for enterprise use?

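Yes, when it is configured correctly. Azure OpenAI offers enterprise-grade security and compliance controls, private endpoints, and integration with Azure AD (Microsoft Entra ID) and Microsoft governance tools. Prompts and completions sent to Azure OpenAI are not used to train the underlying models.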

What tools are required to build an AI assistant in a Microsoft environment?

Core tools include Semantic Kernel (.NET SDK), Azure OpenAI, Microsoft Graph, Azure AI Search, Power Automate, Azure Functions, and the Microsoft 365 Copilot SDK.

How do AI assistants access internal documents or data?

Assistants can use Azure AI Search with RAG (Retrieval-Augmented Generation) to pull relevant content from SharePoint, OneDrive, or databases and inject it into LLM prompts.

Can I control what data the assistant can access per user?

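Yes. When the assistant calls Microsoft Graph with delegated permissions (acting on behalf of the signed-in user), Graph returns only data that user is already authorized to see, based on their Azure AD (Microsoft Entra ID) identity. Application permissions, by contrast, typically grant broad, tenant-wide access, so reserve them for carefully scoped back-end tasks. For other sources, such as a search index used for RAG, apply Role-Based Access Control (RBAC) and security filters so results are trimmed to each user's permissions.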

What’s the best interface for deploying an internal assistant?

Microsoft Teams is ideal for quick deployment and high visibility. Assistants can also be embedded in Outlook, Excel, or web portals, or delivered as browser extensions, depending on the use case. With .NET, you can build web, desktop, mobile, and API front ends.

How do I keep token costs under control when using Azure OpenAI?

Use GPT-3.5 when possible, truncate long inputs, cache frequent responses, and monitor token usage by prompt and department. Azure provides detailed cost breakdowns.

Can AI assistants take actions like sending emails or updating databases?

Yes. Assistants can trigger actions via plugins or workflow tools like Power Automate, Azure Functions, or custom .NET APIs—moving from “copilot” to “co-worker.”

Do I need to train my own model?

No. Azure OpenAI provides access to pre-trained models like GPT-4. You tailor them to your business needs with prompts, context, and plugins, with no training required.

How do I test and validate assistant responses?

Use scenario-based testing with real user inputs. Validate output accuracy, token efficiency, and user satisfaction. You can also add feedback buttons in the assistant UI.

What departments benefit most from a first assistant deployment?

HR, Finance, and Operations are ideal starting points. Common wins include onboarding automation, weekly report generation, and summarizing documents or data.

Can I reuse assistant components across departments?

Absolutely. Prompt templates, plugins, and UI components can be cloned and customized per department, creating an internal library of reusable assistant “skills.”

Is this only for large enterprises, or can mid-sized businesses benefit too?

AI assistants are especially valuable for mid-sized organizations with limited staff. They scale internal expertise, reduce bottlenecks, and enable leaner operations without adding headcount.