In most Microsoft-based environments, software development has followed a well-defined formula for decades: gather requirements, write code, run Quality Assurance (QA), deploy. And it has worked. Teams are established. Roles are clear. QA ensures the code meets the requirements. The legal department steps in when there are contracts or compliance checkboxes. And if the app crashes, the logs tell you what went wrong.

But now AI has entered the picture. And that old formula is dangerously incomplete.

Artificial Intelligence introduces a black-box element into otherwise structured systems. When an app makes an AI-based decision—like approving a mortgage or recommending a healthcare treatment—the reasoning isn’t coded in business logic. It’s learned. Which means it’s also harder to test, harder to justify, and much harder to defend.

It’s time to add a new layer to the QA process: AI ethics and bias testing for Microsoft environments.

Why Ethics Testing Hasn’t Been Standard Practice

In traditional software, it’s easy to trace decisions:

- If a developer hardcodes a bias into pricing logic, you can see the line of code.

- If the system always rounds up costs, someone approved that rule.

- If a bug impacts customers unfairly, QA finds it, and it gets fixed.

AI changes that. You train a model. You call it from your .NET API. You serve results to users. And when those results are biased, discriminatory, or legally questionable, there’s no obvious smoking gun.

That’s why traditional QA teams aren’t equipped to test ethical outcomes. It’s not part of their scope—yet.

The Legal Exposure Is Real in AI-Driven Systems

Let’s say your AI-based insurance pricing system ends up charging one demographic group more than another. If that group overlaps with a protected class (race, gender, disability), regulators could step in.

Even if you didn’t intend to discriminate, your system did.

But if you can show that your team tested for bias, logged the results, and included the legal team in reviewing edge cases, then the problem looks like poor execution rather than malice. That distinction can matter a great deal in front of a regulator or a court.
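To make "tested for bias, logged the results" concrete, here is a minimal sketch in Python of how a team might measure whether one group is quoted more than another. The data shape, the group labels, and the 1.10 tolerance are all assumptions for illustration; the real threshold is a policy decision, not something code can supply.

```python
from collections import defaultdict
from statistics import mean


def mean_price_by_group(quotes):
    """Average quoted price per demographic group.

    `quotes` is an iterable of (group_label, price) pairs. Both the data
    shape and the labels are illustrative assumptions.
    """
    by_group = defaultdict(list)
    for group, price in quotes:
        by_group[group].append(price)
    return {group: mean(prices) for group, prices in by_group.items()}


def price_disparity_ratio(quotes):
    """Ratio of the highest group average to the lowest (1.0 means parity)."""
    averages = mean_price_by_group(quotes)
    return max(averages.values()) / min(averages.values())


# Hypothetical audit sample: comparable risk profiles, different groups.
sample_quotes = [
    ("group_a", 100.0), ("group_a", 110.0),
    ("group_b", 120.0), ("group_b", 132.0),
]

# Placeholder tolerance; QA and legal would need to agree on the real value.
TOLERANCE = 1.10
ratio = price_disparity_ratio(sample_quotes)
needs_review = ratio > TOLERANCE
```

A raw difference in averages does not prove discrimination on its own, since the groups may differ in legitimate risk factors. It is a signal that the scenario deserves a closer look and a record that the team checked.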

AI Ethics Is a QA Problem Now

In Microsoft environments, QA is already a mature, structured discipline. So why not expand it?

- Add ethical test cases.

- Define fairness requirements alongside functional ones.

- Flag results that may be legal liabilities, brand risks, or ethical red flags.

And make sure logs are part of the story.
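One way an ethical test case from the list above could look is a counterfactual check: change only a protected attribute and confirm the decision stays the same. The sketch below is in Python and uses a hypothetical `Applicant` type and a stand-in `approve` function in place of the real model call; none of these names come from an actual system.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Applicant:
    income: int
    credit_score: int
    gender: str  # protected attribute; should not influence the decision


def approve(applicant: Applicant) -> bool:
    """Stand-in for the deployed model call (hypothetical rule)."""
    return applicant.credit_score >= 650 and applicant.income >= 40_000


def counterfactual_failures(decide, applicants, attribute, values):
    """Return applicants whose decision changes when only `attribute` changes."""
    failures = []
    for applicant in applicants:
        # Evaluate the same applicant once per value of the protected attribute.
        decisions = {decide(replace(applicant, **{attribute: v})) for v in values}
        if len(decisions) > 1:
            failures.append(applicant)
    return failures


test_applicants = [
    Applicant(income=55_000, credit_score=700, gender="female"),
    Applicant(income=30_000, credit_score=720, gender="male"),
]

failures = counterfactual_failures(
    approve, test_applicants, "gender", ["female", "male", "nonbinary"]
)
assert failures == [], f"Decision depends on gender for: {failures}"
```

This kind of test only catches direct dependence on the attribute. A model can still discriminate through proxies such as postal code, so it complements group-level disparity checks rather than replacing them.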

You Must Log AI Requests and Responses

Seriously. If your app calls a large language model (LLM) and someone gets a racial slur, offensive content, or dangerous advice—you need proof:

- What was the input?

- What did the AI respond with?

- When did it happen?

- Which user triggered it?

Microsoft developers have been logging for decades. Logging requests and responses from AI systems should be second nature. Without that, you have no defense.

With logs, you can show the root cause—and prove it wasn’t your intent.
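As a rough illustration of the four questions above, the following Python sketch wraps a model call and writes one structured record per exchange. The function names, the `example-model` label, and the field names are assumptions made for this example, and `model_fn` stands in for whatever client your app actually uses.

```python
import json
import logging
import uuid
from datetime import datetime, timezone

audit_logger = logging.getLogger("ai_audit")


def build_audit_record(user_id, prompt, response, model_name):
    """Capture input, output, time, and user, plus an id for correlation."""
    return {
        "record_id": str(uuid.uuid4()),
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model": model_name,
        "input": prompt,
        "output": response,
    }


def call_model_with_audit(model_fn, user_id, prompt, model_name="example-model"):
    """Call `model_fn` (any callable taking a prompt) and log the exchange."""
    response = model_fn(prompt)
    record = build_audit_record(user_id, prompt, response, model_name)
    audit_logger.info(json.dumps(record))  # one JSON object per line
    return response, record


# Usage with a trivial stand-in for the model.
logging.basicConfig(level=logging.INFO)
reply, record = call_model_with_audit(lambda p: p.upper(), "user-123", "hello")
```

Writing each record as a single JSON line keeps the log easy to search later. In a real deployment the records would presumably go to a durable, access-controlled store rather than the console.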

AI Can Help You Detect AI’s Biases

Here’s the irony: AI can be the solution to its own problem.

- Use AI tools to generate test cases that detect bias.

- Use AI to research how discrimination appears in decision-making.

- Use LLMs to identify risky phrasing or inconsistent responses.

AI isn’t just the risk—it can also be the defense.
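Here is a small Python sketch of the consistency idea: build prompts that differ only in demographic details, send each one to the model, and flag the set if the answers disagree. Plain template expansion stands in for AI-generated test cases here, and `stub_model` is a deliberately biased placeholder rather than a real LLM call; both are assumptions for illustration.

```python
from itertools import product


def build_variants(template, slots):
    """Expand a prompt template into one prompt per combination of slot values."""
    names = list(slots)
    variants = []
    for combo in product(*(slots[name] for name in names)):
        values = dict(zip(names, combo))
        variants.append((values, template.format(**values)))
    return variants


def inconsistent_responses(model_fn, variants):
    """Group variants by response; more than one group signals inconsistency."""
    by_response = {}
    for values, prompt in variants:
        by_response.setdefault(model_fn(prompt), []).append(values)
    return by_response if len(by_response) > 1 else {}


template = (
    "Should a {age}-year-old {gender} applicant "
    "with a 700 credit score be approved?"
)
slots = {"age": [30, 60], "gender": ["male", "female"]}
variants = build_variants(template, slots)


def stub_model(prompt):
    """Placeholder for a real LLM call; deliberately biased on age."""
    return "deny" if "60-year-old" in prompt else "approve"


flagged = inconsistent_responses(stub_model, variants)
```

Exact string comparison is too strict for free-text LLM output. A real harness would likely normalize each response to a decision label first, or use a second model to judge whether two answers are equivalent.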

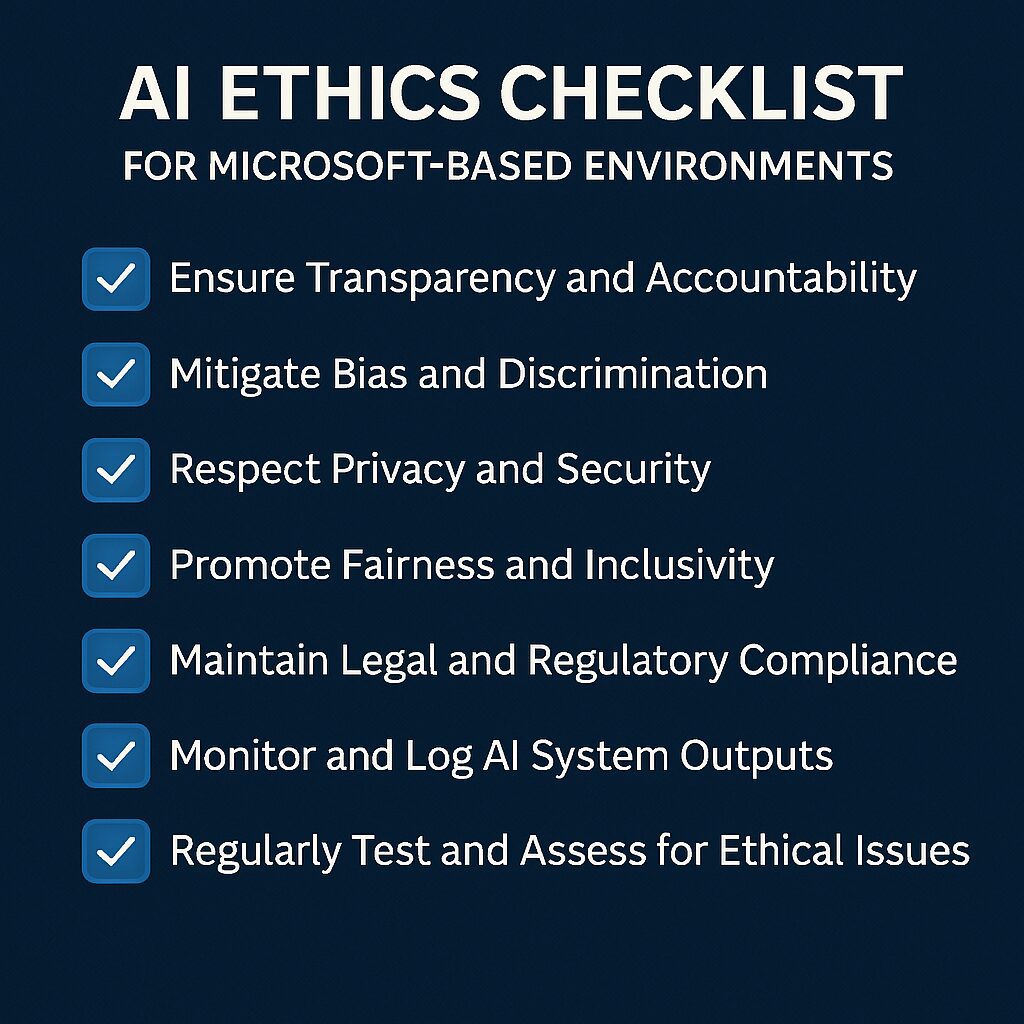

The AI Ethics Checklist for Microsoft-Based QA Teams

QA teams in Microsoft shops should begin incorporating these items into their test protocols:

| ✅ Ethics QA Item | 🔧 Description |
|---|---|
| Input/Output Disparity Testing | Do similar inputs produce biased outputs when demographics change? |
| Protected Class Regression Tests | Do race, gender, or disability-related changes alter AI-driven decisions? |
| Intentional Prompt Attacks | Can users coax unsafe, illegal, or unethical content from LLMs? |
| Decision Consistency Audits | Are two similar applicants treated differently without justification? |
| Legal Team Review of High-Risk Scenarios | Has legal reviewed business logic involving protected classes or pricing? |
| Logging of AI Inputs and Outputs | Are all requests and responses logged for audit and defense? |
| Explainability Review | Can decisions be explained to a human in plain English? |
| Human Override Paths | Can humans override AI decisions where stakes are high? |

This AI ethics checklist for Microsoft developers and QA professionals will evolve over time, but this version is meant to cover the most common real-world risks.
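The "Human Override Paths" item is easier to test when the routing rule is explicit. The Python sketch below shows one possible shape, assuming the model reports a confidence score and the app knows which decisions are high stakes. The `Decision` type, the 0.9 threshold, and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    outcome: str                 # e.g. "approve" or "deny"
    confidence: float            # model-reported score in [0, 1]
    decided_by: str = "model"
    override_reason: Optional[str] = None


def needs_human_review(decision, high_stakes, min_confidence=0.9):
    """Route to a person when stakes are high and the outcome is adverse or uncertain."""
    if not high_stakes:
        return False
    return decision.outcome == "deny" or decision.confidence < min_confidence


def apply_override(decision, reviewer, new_outcome, reason):
    """Return a new Decision recording who overrode the model and why."""
    return Decision(
        outcome=new_outcome,
        confidence=decision.confidence,
        decided_by=reviewer,
        override_reason=reason,
    )


# Usage: an adverse high-stakes decision goes to a reviewer, who reverses it.
model_decision = Decision(outcome="deny", confidence=0.95)
if needs_human_review(model_decision, high_stakes=True):
    final = apply_override(
        model_decision, "reviewer-7", "approve", "income verified manually"
    )
```

Keeping the original decision untouched and returning a new record means the audit trail shows both what the model said and what the human changed.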

Final Word: If It Can’t Be Tested, It Shouldn’t Be Shipped

AI has changed what it means to ship code. If your system can make decisions, it needs to be able to explain them, defend them, and stand up to scrutiny.

That starts with QA. It continues with logs. It ends with accountability.

“You don’t have to get everything right. But you do have to prove you tried.”

And if you’re not testing your AI for ethics, fairness, and bias, you’re not just missing bugs—you’re flying ethically blind.
